2020-02-27 12:28:10

Preliminaries

Today’s agenda

- The values of science

- Learning from cases of misconduct

- Is there a crisis of reproducibility?

The values of science: problems and solutions

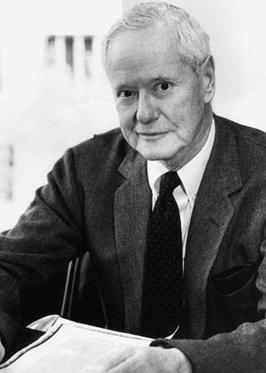

Robert Merton

CUDOS

- communalism: common ownership of scientific goods (intellectual property)

- universalism: scientific validity is independent of sociopolitical status/personal attributes of its participants

- disinterestedness: scientific institutions benefit a common scientific enterprise, not specific individuals

- organized skepticism: claims should be exposed to critical scrutiny before being accepted

Evidence of endorsement of Mertonian norms in a scientific community (Kim & Kim, 2018)

Does psychology have a toothbrush problem? (Mischel, 2011)

“…psychologists tend to treat other peoples’ theories like toothbrushes; no self-respecting individual wants to use anyone else’s.”

“The toothbrush culture undermines the building of a genuinely cumulative science, encouraging more parallel play and solo game playing, rather than building on each other’s directly relevant best work.”

An ethical duty to share? (Brakewood & Poldrack, 2013)

“…the principles of human subject research require an analysis of both risks and benefits…such an analysis suggests that researchers may have a positive duty to share data in order to maximize the contribution that individual participants have made.”

Transparency and openness as essential scientific values (SRCD, 2019)

“We regard scientific integrity, transparency, and openness as essential for the conduct of research and its application to practice and policy…”

“SRCD holds that highlighting integrity, transparency, and openness as core values of the Society strengthens child development research as a whole. In emphasizing these values, our science will yield more robust and reliable findings that others can readily build upon and will better serve parents, the public, and policymakers who support and depend upon our work.”

Inefficiencies in scientific communication (Nosek & Bar-Anan, 2012)

- No communication

- Slow communication

- Incomplete communication

- Inaccurate communication

- Unmodifiable communication

Recommendations

- Make the internet the primary means of scientific communication

- Open access to all research

- Disentangle publication from evaluation

- “Grading” papers

- Publishing peer review

- Open continuous peer review

What does your scientific utopia look like?

Gilmore’s…

- All data underlying publications is available for reanalysis/visualization/verification in restricted access data repositories

- All code used to generate displays or run tasks is openly shared

- All measures are openly shared and well-documented

- e.g., your definition of race vs. mine

- Better, more systematic documentation of theoretical commitments, unstated assumptions, actual findings
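Well-documented measures can also be machine-checkable. A minimal sketch of one approach, assuming a hypothetical codebook stored as a Python dictionary: every variable gets a written definition, and categorical variables (such as race, whose definition differs from lab to lab) list their allowed codes. Undocumented columns and out-of-scheme values can then be flagged before data are shared. All variable names and categories here are illustrative, not a standard.

```python
# Hypothetical codebook: each variable in a shared dataset gets a documented
# definition and type; categorical variables also list their allowed codes.
codebook = {
    "participant_id": {"description": "Anonymized participant identifier", "type": "str"},
    "age_months": {"description": "Age at test in months", "type": "int"},
    "race": {
        "description": "Parent-reported race, using this study's own category scheme",
        "type": "str",
        "allowed": ["asian", "black", "white", "multiracial", "other", "declined"],
    },
}


def undocumented_columns(columns, codebook):
    """Return dataset columns that have no codebook entry."""
    return [c for c in columns if c not in codebook]


def invalid_values(rows, codebook):
    """Return (row_index, column, value) for values outside a column's allowed codes."""
    problems = []
    for i, row in enumerate(rows):
        for col, value in row.items():
            allowed = codebook.get(col, {}).get("allowed")
            if allowed is not None and value not in allowed:
                problems.append((i, col, value))
    return problems


rows = [
    {"participant_id": "p01", "age_months": 14, "race": "white"},
    {"participant_id": "p02", "age_months": 18, "race": "wht", "sex": "f"},  # miscoded + undocumented
]
print(undocumented_columns(["participant_id", "age_months", "race", "sex"], codebook))  # ['sex']
print(invalid_values(rows, codebook))  # [(1, 'race', 'wht')]
```

A shared codebook like this makes "your definition of race vs. mine" explicit: a reader can see exactly which categories were used.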

Learning from cases of misconduct

The Hauser and Stapel cases

Marc Hauser

- Evolutionary/Comparative Psychologist, Professor at Harvard

- Resigned 2011 after internal investigation found him responsible for research misconduct

2012 findings by the HHS Office of Research Integrity (ORI), published in the NIH Guide

- Fabricated data in Figure 2 of (Hauser, Weiss, & Marcus, 2002); paper retracted.

- Falsified coding in unpublished data.

- Falsely described methodology in a manuscript submitted to Cognition, Science, and Nature; the manuscript was corrected prior to publication as (Saffran et al., 2008)

- Falsely reported results and methodology for one study in (Hauser, Glynn, & Wood, 2007)

- Made false statement in Methodology section of (Wood, Glynn, Phillips, & Hauser, 2007)

- Engaged in research misconduct by providing inconsistent coding of unpublished data

“Respondent neither admits nor denies committing research misconduct but accepts ORI has found evidence of research misconduct as set forth above and has entered into a Voluntary Settlement Agreement to resolve this matter. The settlement is not an admission of liability on the part of the Respondent.”

https://grants.nih.gov/grants/guide/notice-files/NOT-OD-12-149.html

Hauser’s response

“…Although I have fundamental differences with some of the findings in the ORI report, I acknowledge that I made mistakes. I tried to do too much, teaching courses, running a large lab of students, sitting on several editorial boards, directing the Mind, Brain & Behavior Program at Harvard, conducting multiple research collaborations, and writing for the general public. I let important details get away from my control, and as head of the lab, I take responsibility for all errors made within the lab, whether or not I was directly involved…”

“I am saddened that this investigation has caused some to question all of my work, rather than the few papers and unpublished studies in question. Before, during and after the investigation, many of my lab’s research findings were replicated by independent researchers…”

Diederik Stapel

- Dean of the School of Social and Behavioral Sciences at Tilburg University

- Taught a Scientific Ethics course

- Fraud investigation launched when 3 grad students noticed anomalies – duplicate entries in data tables

- Stapel confessed, lost position, gave up Ph.D., wrote a book
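The anomaly that triggered the Stapel investigation, duplicate entries in data tables, is the kind of thing a simple script can surface. A minimal sketch, assuming hypothetical questionnaire data with one row per participant: it ignores the ID column and reports any full response pattern that occurs more than once. This is not a reconstruction of how the anomalies were actually found, and a match is a reason to look closer, not proof of fabrication.

```python
from collections import Counter


def duplicated_records(rows, ignore=("participant_id",)):
    """Return response patterns (ID columns excluded) that appear more than once.

    Identical multi-item response patterns across different participants are
    rare by chance, so repeats are worth a closer look.
    """
    keys = [
        tuple((k, v) for k, v in sorted(row.items()) if k not in ignore)
        for row in rows
    ]
    counts = Counter(keys)
    return {key: n for key, n in counts.items() if n > 1}


# Hypothetical data: participants 1 and 3 have identical answers on every item.
rows = [
    {"participant_id": 1, "q1": 5, "q2": 3, "q3": 4, "q4": 2},
    {"participant_id": 2, "q1": 2, "q2": 4, "q3": 1, "q4": 5},
    {"participant_id": 3, "q1": 5, "q2": 3, "q3": 4, "q4": 2},
    {"participant_id": 4, "q1": 3, "q2": 3, "q3": 2, "q4": 4},
]
dups = duplicated_records(rows)
for pattern, n in dups.items():
    print(dict(pattern), "appears", n, "times")
# {'q1': 5, 'q2': 3, 'q3': 4, 'q4': 2} appears 2 times
```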

Flawed science: The fraudulent research practices of social psychologist Diederik Stapel

- “…found to have committed a serious infringement of scientific integrity by using fictitious data in his publications, while presenting the data as the output of empirical research.”

- Previous suspicions/concerns about data “too good to be true” were ignored.

- Fraud established in 55 publications.

How did it happen?

- “admitted to having committed fraud in much of his research. He has also conceded that he has been sloppy, kept inadequate records and presented data in the best light possible”

- “…No explicit attention was paid to the combating or prevention of scientific misconduct within the scientific research environment in the Netherlands, and there was certainly no formal Code for research integrity…”

- “…Whatever measures existed were more implicit, and any action was at the discretion of individual researchers….”

- “…no culture of transparency, information sharing, peer review and joint responsibility in this Faculty. It was easy for researchers to go their own way…”

- “…[researchers] did not share their data with other members of the various research groups, and there was no peer review…”

- “People trusted each other’s scientific integrity: no feedback, no warnings, no correction.”

Flawed science: The fraudulent research practices of social psychologist Diederik Stapel

Recommendations

- Avoid verification biases (pp. 48-50)

- Provide complete information about methodology, sufficient to permit replication

- Avoid statistical errors

- If it’s “too good to be true”, it probably isn’t.

- Journals should avoid bias toward “elegant, concise, attractive findings”

- “The data on which an experimental psychology doctoral dissertation is based must as a rule be collected and analysed by the PhD students themselves.”
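One way to make "too good to be true" concrete (not taken from the report) is to ask how likely a set of uniformly significant results would be, given the studies' statistical power. A minimal sketch, assuming independent studies, real effects, and hypothetical power values:

```python
from math import prod


def prob_all_significant(powers):
    """Probability that every study in a set reaches significance,
    assuming independent studies that each test a real effect
    with the given statistical power."""
    return prod(powers)


# Hypothetical package of five studies, each with about 50% power.
powers = [0.5, 0.5, 0.5, 0.5, 0.5]
p = prob_all_significant(powers)
print(f"P(all 5 significant) = {p:.3f}")  # P(all 5 significant) = 0.031
```

Even if every effect is real, five studies at 50% power would all come out significant only about 3% of the time, so a paper reporting five-for-five under those conditions warrants scrutiny.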

What do you take from these cases?

Is there a crisis of reproducibility?

The Reproducibility Project

(Open Science Collaboration, 2015)

- 39/98 (39.8%) replication attempts were successful

- 97% of original studies reported statistically significant results vs. 36% of replications
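Whether a replication counts as "successful" depends on the criterion, and the Reproducibility Project reported several, including whether the replication was significant in the same direction and whether the original effect size fell inside the replication's 95% confidence interval. A minimal sketch of those two checks, assuming effect sizes expressed as correlations and the usual Fisher-z normal approximation; the function name and example numbers are hypothetical, not the project's code or data.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def replication_criteria(r_orig, r_rep, n_rep, alpha=0.05):
    """Score a replication two ways, using correlation effect sizes.

    1. Replication is significant and in the same direction as the original.
    2. Original effect size lies inside the replication's (1 - alpha) CI.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = 1 / sqrt(n_rep - 3)          # standard error of Fisher's z
    z_rep = atanh(r_rep)              # Fisher r-to-z transform
    lo, hi = tanh(z_rep - z_crit * se), tanh(z_rep + z_crit * se)
    p_rep = 2 * (1 - NormalDist().cdf(abs(z_rep) / se))  # two-sided test of r = 0
    same_direction = (r_orig > 0) == (r_rep > 0)
    return {
        "significant_same_direction": p_rep < alpha and same_direction,
        "original_in_replication_ci": lo <= r_orig <= hi,
        "replication_ci": (round(lo, 3), round(hi, 3)),
    }


# Hypothetical: original r = .30; replication r = .15 with n = 120.
result = replication_criteria(0.30, 0.15, 120)
print(result["significant_same_direction"], result["original_in_replication_ci"])
```

With these illustrative numbers the two criteria disagree: the replication is not significant, yet the original r = .30 lies inside its confidence interval. This is one reason a single replication "rate" can be contested.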


So, did the studies replicate?

- (Gilbert, King, Pettigrew, & Wilson, 2016)

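- Sampling error alone predicts less than 100% reproducibility, even if every original effect is real

- Original and replication samples often differed (e.g., drawn from different populations)

- Only 69% of original PIs “endorsed” the replication protocol. The replication rate was nearly 4x higher (59.7% vs. 15.4%) in studies with an endorsed protocol.

- What range of effect sizes should we expect, given sampling and methodological variability? Gilbert et al. estimated this from the Many Labs project

- Gilbert et al. conclude that the Open Science Collaboration (2015) “…seriously underestimated reproducibility of psychological science.”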
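The sampling-error point can be made concrete with a power calculation. The sketch below is a simplified illustration, not Gilbert et al.'s Many Labs-based analysis: it assumes a real standardized effect d, a two-sided two-sample comparison, and a normal approximation to the test statistic, with hypothetical values for d and the sample size.

```python
from math import sqrt
from statistics import NormalDist


def replication_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample test (normal approximation)
    to detect a true standardized mean difference d."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    ncp = d * sqrt(n_per_group / 2)   # expected test statistic under the true effect
    return (1 - z.cdf(z_crit - ncp)) + z.cdf(-z_crit - ncp)


# Hypothetical: the original effect is real (d = 0.4); the replication uses 50 per group.
print(round(replication_power(0.4, 50), 2))  # 0.52
```

Under these assumptions only about half of replications of a true effect would reach p < .05, so a replication rate well below 100% is expected even when nothing is wrong with the original finding.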

Issues

- Kudos to the Open Science Collaboration (2015) and Gilbert et al. (2016) for addressing these issues openly

- Data from (Open Science Collaboration, 2015)

- Data from (Gilbert et al., 2016)

- Reproducibility of “psychological science” vs. a specific finding

- What is the true effect size of a particular manipulation?

- Domain-specific differences in/challenges to reproducibility

- Possible confusion about types of reproducibility

Differences across scientific domains (Goodman, Fanelli, & Ioannidis, 2016)

- Degree of determinism

- Signal to measurement-error ratio

- Complexity of designs and measurement tools

- Closeness of fit between hypothesis and experimental design or data

- Statistical or analytic methods to test hypotheses

- Typical heterogeneity of experimental results

- Culture of replication, transparency, and cumulating knowledge

- Statistical criteria for truth claims

- Purposes to which findings will be put and consequences of false conclusions
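The "signal to measurement-error ratio" factor has a classic quantitative form in psychology: unreliable measures shrink observed correlations. A minimal sketch of Spearman's attenuation relation, r_observed = r_true × sqrt(reliability_x × reliability_y), with hypothetical values for the true correlation and the reliabilities:

```python
from math import sqrt


def attenuated_r(true_r, reliability_x, reliability_y):
    """Expected observed correlation between two measures, given their
    true-score correlation and reliabilities (Spearman's attenuation formula)."""
    return true_r * sqrt(reliability_x * reliability_y)


print(round(attenuated_r(0.5, 0.9, 0.9), 2))  # 0.45: highly reliable measures
print(round(attenuated_r(0.5, 0.5, 0.5), 2))  # 0.25: noisy measures
```

Dropping reliability from .9 to .5 on both measures cuts the expected correlation from .45 to .25, and smaller observed effects need larger samples to replicate consistently.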

What does research reproducibility mean?

- Methods

- Results

- Inferential

What does research reproducibility mean?

- Methods reproducibility

“…the ability to implement, as exactly as possible, the experimental and computational procedures, with the same data and tools, to obtain the same results.”
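Methods reproducibility in its narrowest, computational sense can be checked mechanically: rerun the same code on the same data and confirm the output is identical. A minimal sketch, assuming a hypothetical analysis (a seeded percentile bootstrap of a mean) and hypothetical data; fixing the random seed is what makes the resampling repeatable.

```python
import hashlib
import json
import random
from statistics import mean


def bootstrap_ci(data, n_boot=2000, seed=2020):
    """Percentile bootstrap 95% CI for the mean, using a fixed seed."""
    rng = random.Random(seed)  # local RNG: output depends only on data, seed, and Python version
    boot_means = sorted(mean(rng.choices(data, k=len(data))) for _ in range(n_boot))
    return boot_means[int(0.025 * n_boot)], boot_means[int(0.975 * n_boot) - 1]


def result_digest(result):
    """Fingerprint a result so two runs can be compared exactly."""
    return hashlib.sha256(json.dumps(result).encode()).hexdigest()


data = [3.1, 2.7, 4.0, 3.6, 2.9, 3.3, 4.2, 3.8]  # hypothetical scores
run1 = result_digest(bootstrap_ci(data))
run2 = result_digest(bootstrap_ci(data))
print(run1 == run2)  # True: same data, same code, same seed
```

The check only holds for the same tools: Python guarantees a stable stream from `random()` for a given seed, but other sampling methods may change across versions, which is one reason to record the software environment alongside the results.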

What does research reproducibility mean?

- Results reproducibility

“…(previously described as replicability) refers to obtaining the same results from the conduct of an independent study whose procedures are as closely matched to the original experiment as possible.”

What does research reproducibility mean?

- Inferential reproducibility

“…refers to the drawing of qualitatively similar conclusions from either an independent replication of a study or a reanalysis of the original study”

Is there a reproducibility crisis in science?

- Yes, a significant crisis

- Yes, a slight crisis

- No crisis

- Don’t know

Have you failed to reproduce an analysis from your lab or someone else’s?

Does this surprise you? Why or why not?

Next time

- Making research more reproducible

- Reproducible workflows

Resources

Software

This talk was produced on 2020-02-27 in RStudio using R Markdown. The code and materials used to generate the slides may be found at https://github.com/psu-psychology/psy-525-reproducible-research-2020. Information about the R Session that produced the code is as follows:

    ## R version 3.6.2 (2019-12-12)
    ## Platform: x86_64-apple-darwin15.6.0 (64-bit)
    ## Running under: macOS Mojave 10.14.6
    ##
    ## Matrix products: default
    ## BLAS:   /System/Library/Frameworks/Accelerate.framework/Versions/A/Frameworks/vecLib.framework/Versions/A/libBLAS.dylib
    ## LAPACK: /Library/Frameworks/R.framework/Versions/3.6/Resources/lib/libRlapack.dylib
    ##
    ## locale:
    ## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8
    ##
    ## attached base packages:
    ## [1] stats     graphics  grDevices utils     datasets
    ## [6] methods   base
    ##
    ## other attached packages:
    ## [1] seriation_1.2-8
    ##
    ## loaded via a namespace (and not attached):
    ##  [1] Rcpp_1.0.3        highr_0.8
    ##  [3] compiler_3.6.2    pillar_1.4.3
    ##  [5] viridis_0.5.1     bitops_1.0-6
    ##  [7] iterators_1.0.12  tools_3.6.2
    ##  [9] dendextend_1.13.3 digest_0.6.23
    ## [11] packrat_0.5.0     viridisLite_0.3.0
    ## [13] evaluate_0.14     lifecycle_0.1.0
    ## [15] tibble_2.1.3      gtable_0.3.0
    ## [17] pkgconfig_2.0.3   rlang_0.4.4
    ## [19] foreach_1.4.8     cli_2.0.1
    ## [21] registry_0.5-1    rstudioapi_0.10
    ## [23] yaml_2.2.0        xfun_0.12
    ## [25] TSP_1.1-8         gridExtra_2.3
    ## [27] stringr_1.4.0     pwr_1.2-2
    ## [29] dplyr_0.8.3       cluster_2.1.0
    ## [31] knitr_1.27        vctrs_0.2.2
    ## [33] gtools_3.8.1      caTools_1.18.0
    ## [35] tidyselect_1.0.0  grid_3.6.2
    ## [37] glue_1.3.1        R6_2.4.1
    ## [39] fansi_0.4.1       rmarkdown_2.1
    ## [41] gdata_2.18.0      purrr_0.3.3
    ## [43] magrittr_1.5      ggplot2_3.2.1
    ## [45] gplots_3.0.1.2    scales_1.1.0
    ## [47] codetools_0.2-16  gclus_1.3.2
    ## [49] htmltools_0.4.0   MASS_7.3-51.5
    ## [51] assertthat_0.2.1  colorspace_1.4-1
    ## [53] utf8_1.1.4        KernSmooth_2.23-16
    ## [55] stringi_1.4.5     lazyeval_0.2.2
    ## [57] munsell_0.5.0     crayon_1.3.4

References

Baker, M. (2015). Over half of psychology studies fail reproducibility test. Nature News. https://doi.org/10.1038/nature.2015.18248

Brakewood, B., & Poldrack, R. A. (2013). The ethics of secondary data analysis: Considering the application of Belmont principles to the sharing of neuroimaging data. NeuroImage, 82, 671–676. https://doi.org/10.1016/j.neuroimage.2013.02.040

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. https://doi.org/10.1126/science.aac4716

Gilbert, D. T., King, G., Pettigrew, S., & Wilson, T. D. (2016). Comment on “Estimating the reproducibility of psychological science”. Science, 351(6277), 1037–1037. https://doi.org/10.1126/science.aad7243

Goodman, S. N., Fanelli, D., & Ioannidis, J. P. A. (2016). What does research reproducibility mean? Science Translational Medicine, 8(341), 341ps12–341ps12. https://doi.org/10.1126/scitranslmed.aaf5027

Hauser, M. D., Glynn, D., & Wood, J. (2007). Rhesus monkeys correctly read the goal-relevant gestures of a human agent. Proceedings of the Royal Society of London B: Biological Sciences, 274(1620), 1913–1918. https://doi.org/10.1098/rspb.2007.0586

Hauser, M. D., Weiss, D., & Marcus, G. (2002). RETRACTED: Rule learning by cotton-top tamarins. Cognition, 86(1), B15–B22. https://doi.org/10.1016/S0010-0277(02)00139-7

Kim, S. Y., & Kim, Y. (2018). The ethos of science and its correlates: An empirical analysis of scientists’ endorsement of Mertonian norms. Science, Technology and Society, 23(1), 1–24. https://doi.org/10.1177/0971721817744438

Mischel, W. (2011). Becoming a cumulative science. APS Observer, 22(1). Retrieved from https://www.psychologicalscience.org/observer/becoming-a-cumulative-science

Nosek, B. A., & Bar-Anan, Y. (2012). Scientific utopia: I. Opening scientific communication. Psychological Inquiry, 23(3), 217–243. https://doi.org/10.1080/1047840X.2012.692215

Saffran, J., Hauser, M., Seibel, R., Kapfhamer, J., Tsao, F., & Cushman, F. (2008). Grammatical pattern learning by human infants and cotton-top tamarin monkeys. Cognition, 107(2), 479–500. https://doi.org/10.1016/j.cognition.2007.10.010

SRCD. (2019). Policy on scientific integrity, transparency, and openness. Society for Research in Child Development. Retrieved from https://www.srcd.org/policy-scientific-integrity-transparency-and-openness

Wood, J. N., Glynn, D. D., Phillips, B. C., & Hauser, M. D. (2007). The perception of rational, goal-directed action in nonhuman primates. Science, 317(5843), 1402–1405. https://doi.org/10.1126/science.1144663

![Kim & Kim (2018), https://doi.org/10.1177/0971721817744438](https://journals.sagepub.com/na101/home/literatum/publisher/sage/journals/content/stsa/2018/stsa_23_1/0971721817744438/20180208/images/large/10.1177_0971721817744438-table1.jpeg)

![Kim & Kim (2018), https://doi.org/10.1177/0971721817744438](https://journals.sagepub.com/na101/home/literatum/publisher/sage/journals/content/stsa/2018/stsa_23_1/0971721817744438/20180208/images/medium/10.1177_0971721817744438-fig1.gif)

![Open Science Collaboration (2015), https://doi.org/10.1126/science.aac4716](https://science-sciencemag-org.ezaccess.libraries.psu.edu/content/sci/349/6251/aac4716/F1.large.jpg)

![Open Science Collaboration (2015), https://doi.org/10.1126/science.aac4716](https://science-sciencemag-org.ezaccess.libraries.psu.edu/content/sci/349/6251/aac4716/F2.large.jpg)

![Baker, https://doi.org/10.1038/533452a](https://www.nature.com/polopoly_fs/7.36716.1469695923!/image/reproducibility-graphic-online1.jpeg_gen/derivatives/landscape_630/reproducibility-graphic-online1.jpeg)

![Baker, https://doi.org/10.1038/533452a](https://www.nature.com/polopoly_fs/7.36718.1464174471!/image/reproducibility-graphic-online3.jpg_gen/derivatives/landscape_630/reproducibility-graphic-online3.jpg)

![Baker, https://doi.org/10.1038/533452a](https://www.nature.com/polopoly_fs/7.36719.1464174488!/image/reproducibility-graphic-online4.jpg_gen/derivatives/landscape_630/reproducibility-graphic-online4.jpg)