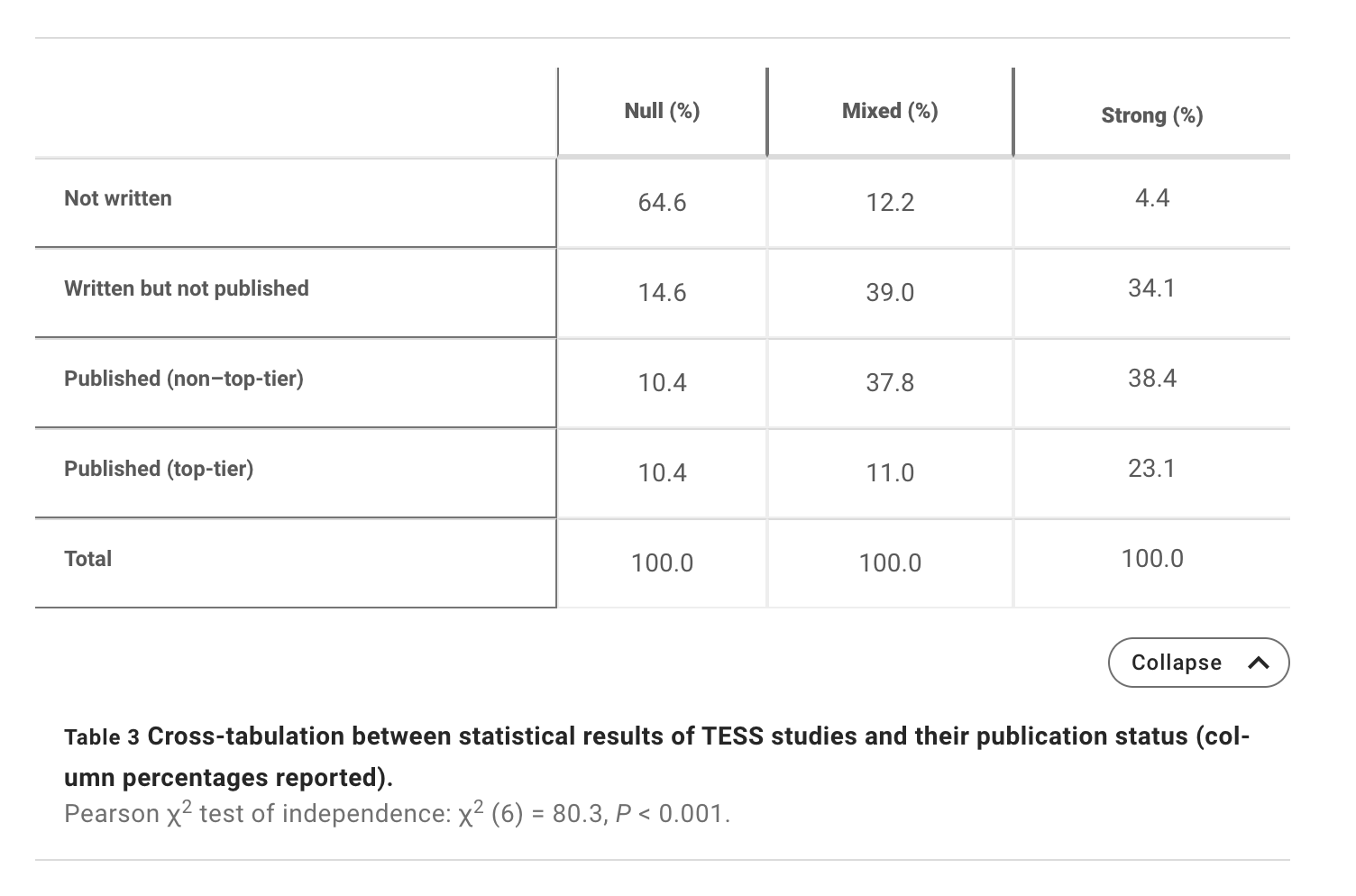

Franco, A., Malhotra, N., & Simonovits, G. (2014a). Publication bias in the social sciences: Unlocking the file drawer. *Science*, *345*(6203), 1502–1505. https://doi.org/10.1126/science.1255484

Franco, A., Malhotra, N., & Simonovits, G. (2014b, August). *FileDrawer*. Zenodo. https://doi.org/10.5281/zenodo.11300

Hypothesis, B. A. S. (2021, February). 13. *“Negative data” and the file drawer problem*. YouTube. Retrieved from https://www.youtube.com/watch?v=9I1qR8PTr54

Nuijten, M. B., Hartgerink, C. H. J., Assen, M. A. L. M. van, Epskamp, S., & Wicherts, J. M. (2015). The prevalence of statistical reporting errors in psychology (1985–2013). *Behavior Research Methods*, 1–22. https://doi.org/10.3758/s13428-015-0664-2

Rosenthal, R. (1979). The file drawer problem and tolerance for null results. *Psychological Bulletin*, *86*(3), 638–641. https://doi.org/10.1037/0033-2909.86.3.638

Szucs, D., & Ioannidis, J. P. A. (2017). Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature. *PLoS Biology*, *15*(3), e2000797. https://doi.org/10.1371/journal.pbio.2000797