Solutions

2024-10-28 Mon

Overview

Announcements

- Final version due Friday, November 8

Last time…

- How can scientists balance the need to disseminate results to broader audiences with the risks of over-simplifying or conveying misleading information?

Today

Munafò et al. (2017)

Munafò, M. R., Nosek, B. A., Bishop, D. V. M., Button, K. S., Chambers, C. D., Percie du Sert, N., Simonsohn, U., Wagenmakers, E.-J., Ware, J. J. & Ioannidis, J. P. A. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1, 0021. https://doi.org/10.1038/s41562-016-0021

Abstract

Improving the reliability and efficiency of scientific research will increase the credibility of the published scientific literature and accelerate discovery. Here we argue for the adoption of measures to optimize key elements of the scientific process: methods, reporting and dissemination, reproducibility, evaluation and incentives.

Munafò et al. (2017)

There is some evidence from both simulations and empirical studies supporting the likely effectiveness of these measures, but their broad adoption by researchers, institutions, funders and journals will require iterative evaluation and improvement.

Munafò et al. (2017)

We discuss the goals of these measures, and how they can be implemented, in the hope that this will facilitate action toward improving the transparency, reproducibility and efficiency of scientific research.

Munafò et al. (2017)

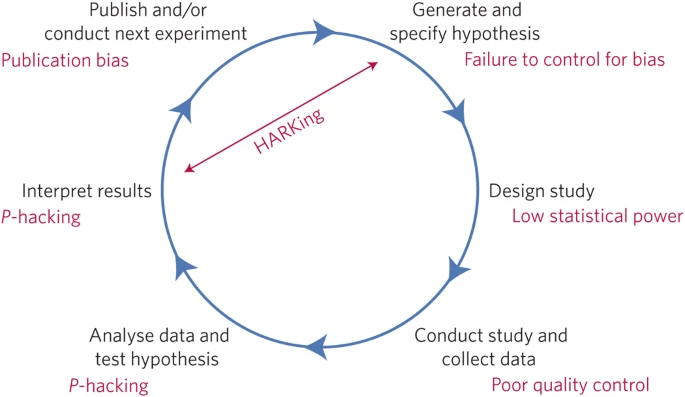

Figure 1 from Munafò et al. (2017)

Table 1

Link: https://www.nature.com/articles/s41562-016-0021/tables/1

Improving methods

- Protecting against cognitive biases
- Improving methodological training
- Implementing independent methodological support
- Encouraging collaboration and team science

Improving reporting and dissemination

- Promoting study pre-registration
- Improving the quality of reporting

Improving reproducibility

- Promoting transparency and open science

Improving evaluation

- Diversifying peer review

Changing incentives

- Rewarding open and reproducible practices

Note

Which of these recommended practices would be at the top of your to-do list if you were a journal editor or publisher, a funder, an institution (college or university), or a regulator (e.g., a government entity)?

Which would be at the bottom of your list?

What’s missing from the list?

What can students do?

Begley (2013)

Begley, C. G. (2013). Six red flags for suspect work. Nature, 497(7450), 433–434. https://doi.org/10.1038/497433a

Were experiments performed blinded?

Were basic experiments repeated?

Were all the results presented?

Begley (2013)

Were there positive and negative controls? Often in the non-reproducible, high-profile papers, the crucial control experiments were excluded or mentioned as ‘data not shown’.

Were reagents validated?

Were statistical tests appropriate?

Begley (2013)

Why do we repeatedly see these poor-quality papers in basic science? In part, it is down to the fact that there is no real consequence for investigators or journals. It is also because many busy reviewers (and disappointingly, even co-authors) do not actually read the papers, and because journals are required to fill their pages with simple, complete ‘stories’.

Begley (2013)

And because of the apparent failure to recognize authors’ competing interests — beyond direct financial interests — that may interfere with their judgement.

Begley (2013)

Next time

Changing journal policies

- Final version due

- Read

- Class notes