Reproducible and robust psychological science

Agenda

- Prelude

- Some questions to ponder

- Discuss Munafò et al. 2017

- Discuss Silberzahn et al. 2018

- Discuss Gilmore et al. 2018

- Tools for reproducible science

- An open science future

Questions to ponder

What proportion of findings in the published scientific literature (in the fields you care about) are actually true?

- 100%

- 90%

- 70%

- 50%

- 30%

How do we define what “actually true” means?

Is there a reproducibility crisis in science?

- Yes, a significant crisis

- Yes, a slight crisis

- No crisis

- Don’t know

Have you failed to reproduce an analysis from your lab or someone else’s?

Does this surprise you? Why or why not?

What is reproducibility, anyway?

Methods reproducibility

- Enough details about materials & methods recorded (& reported)

- Same results with same materials & methods

Results reproducibility

- Same results from independent study

Inferential reproducibility

- Same inferences from one or more studies or reanalyses

Is scientific research different from other (flawed) human endeavors?

Robert Merton

Wikipedia

- universalism: scientific validity is independent of sociopolitical status/personal attributes of its participants

- communalism: common ownership of scientific goods (intellectual property)

- disinterestedness: scientific institutions benefit a common scientific enterprise, not specific individuals

- organized skepticism: claims should be exposed to critical scrutiny before being accepted

Are these norms at risk?

“…psychologists tend to treat other peoples’ theories like toothbrushes; no self-respecting individual wants to use anyone else’s.”

“The toothbrush culture undermines the building of a genuinely cumulative science, encouraging more parallel play and solo game playing, rather than building on each other’s directly relevant best work.”

Munafò et al. 2017

Do these issues affect your research?

Silberzahn et al. 2018

“Twenty-nine teams involving 61 analysts used the same data set to address the same research question: whether soccer referees are more likely to give red cards to dark-skin-toned players than to light-skin-toned players.”

How much did results vary between different teams using the same data to test the same hypothesis?

What were the consequences of this variability in analytic approaches?

Did the analysts’ beliefs regarding the hypothesis change over time?

“Here, we have demonstrated that as a result of researchers’ choices and assumptions during analysis, variation in estimated effect sizes can emerge even when analyses use the same data.”

“These findings suggest that significant variation in the results of analyses of complex data may be difficult to avoid, even by experts with honest intentions.”

“The best defense against subjectivity in science is to expose it.”

“Transparency in data, methods, and process gives the rest of the community opportunity to see the decisions, question them, offer alternatives, and test these alternatives in further research.”
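To see how analytic choices alone can move an estimate, here is a minimal R sketch on simulated data (not the Silberzahn et al. data set). The variable names, effect sizes, and the two model specifications are illustrative assumptions: one model ignores a covariate that is correlated with the predictor, the other adjusts for it, and the two report different odds ratios for the same question.

```r
# Simulated data (illustrative only): a binary outcome that depends on the
# predictor and on a covariate that is itself correlated with the predictor.
set.seed(2018)
n <- 2000
covariate <- rnorm(n)
predictor <- 0.5 * covariate + rnorm(n)
outcome <- rbinom(n, size = 1,
                  prob = plogis(-2 + 0.2 * predictor + 0.8 * covariate))
sim_data <- data.frame(outcome, predictor, covariate)

# Two defensible-looking specifications: same data, same research question.
specs <- list(
  unadjusted = outcome ~ predictor,
  adjusted   = outcome ~ predictor + covariate
)

# Fit each logistic regression and keep the odds ratio for the predictor.
odds_ratios <- sapply(specs, function(f) {
  fit <- glm(f, data = sim_data, family = binomial)
  exp(coef(fit)[["predictor"]])
})
round(odds_ratios, 2)
```

Sharing the code for every specification, not just the one reported, is what lets others see and question choices like this one.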

Do related issues affect your research?

Practical solutions

What to share

- Data

- Analysis code

- Displays, materials

- Procedure manuals

How to share

- With ethics board/IRB approval

- With participant permission

Where to share data?

- Lab website vs.

- Supplemental information with journal article vs.

- Data repository

When to share

- When you are ready

- Paper goes out for review or is published

- Grant ends

How do these suggestions impact your research?

Tools for reproducible science

What is version control and why use it?

- thesis_new.docx

- thesis_new.new.docx

- thesis_new.new.final.docx

vs.

- thesis_2019-01-15v01.docx

- thesis_2019-01-15v02.docx

- thesis_2019-01-16v01.docx
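The dated naming scheme can be generated rather than typed, and ISO dates (YYYY-MM-DD) sort chronologically as plain text. A minimal R sketch; `versioned_name()` is a hypothetical helper written for this example, not part of any package.

```r
# Hypothetical helper: builds <stem>_<YYYY-MM-DD>v<NN>.<ext>
versioned_name <- function(stem, ext, version = 1, date = Sys.Date()) {
  sprintf("%s_%sv%02d.%s", stem, format(as.Date(date), "%Y-%m-%d"),
          as.integer(version), ext)
}

versioned_name("thesis", "docx", version = 2, date = "2019-01-15")
# returns "thesis_2019-01-15v02.docx"

# Because the date comes first and is zero-padded, alphabetical order
# matches chronological order.
files <- c("thesis_2019-01-16v01.docx", "thesis_2019-01-15v02.docx",
           "thesis_2019-01-15v01.docx")
sort(files)
```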

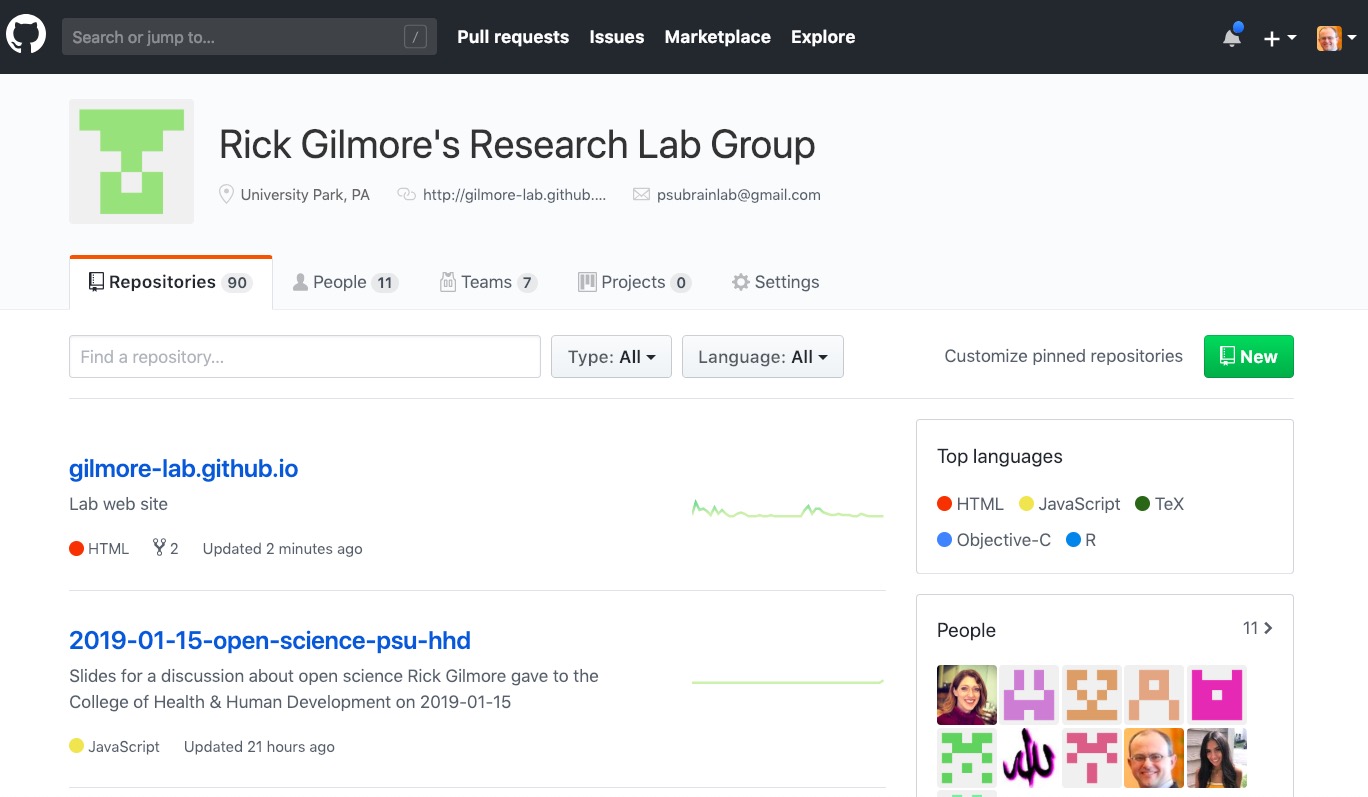

Version control systems

- Used in large-scale software engineering

- svn (Subversion), git

- GitHub, Bitbucket (services that host repositories)

How I use GitHub

- Every project gets a repository

- Work locally, commit (save & increment version), push to GitHub

- Talks, classes, software, analyses, web sites

FAIR data principles

Data should be…

- Findable

- Accessible

- Interoperable

- Reusable

- Data in interoperable formats (.txt or .csv)

- Scripted, automated = minimize human-dependent steps.

- Well-documented

- Kind to your future (forgetful) self

- Transparent to me & colleagues == transparent to others
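Saving data as plain-text CSV with a small data dictionary covers the "interoperable" and "well-documented" points above. A minimal R sketch, assuming a tiny hypothetical data set; the column names, descriptions, and file names are illustrative only.

```r
# Hypothetical example data (stringsAsFactors = FALSE keeps text as text
# in older R versions, where the default was TRUE).
my_data <- data.frame(
  id = 1:3,
  gender = c("female", "male", "female"),
  score = c(12.5, 9.0, 14.25),
  stringsAsFactors = FALSE
)

# Plain-text CSV: readable by R, Python, SPSS, spreadsheets, or a text editor.
csv_file <- file.path(tempdir(), "my_data.csv")
write.csv(my_data, csv_file, row.names = FALSE)

# A simple data dictionary describing each variable, also saved as CSV.
codebook <- data.frame(
  variable = names(my_data),
  description = c("Participant identifier", "Self-reported gender",
                  "Task score (points)"),
  stringsAsFactors = FALSE
)
write.csv(codebook, file.path(tempdir(), "my_data_codebook.csv"),
          row.names = FALSE)

# Round trip: reading the file back recovers the same values.
reread <- read.csv(csv_file, stringsAsFactors = FALSE)
all.equal(reread, my_data)
```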

Scripted analyses

- SPSS, SAS, R, Python

- Jupyter notebooks

- Rmarkdown via RStudio

```r
# Import/gather data
# Clean data
# Visualize data
# Analyze data
# Report findings
```

```r
# Import data
my_data <- read.csv("path/2/data_file.csv")

# Clean data
my_data$gender <- tolower(my_data$gender) # make lower case
# ...
```

```r
# Import data
source("R/Import_data.R") # source() runs scripts, loads functions

# Clean data
source("R/Clean_data.R")

# Visualize data
source("R/Visualize_data.R")
# ...
```

But my SPSS syntax file already does this

- Great! How are you sharing these files?

- (And how much would SPSS cost you if you had to buy it yourself?)

But I prefer {Python, Julia, Ruby, Matlab, …}

- Great! Let’s talk about R Markdown

Reproducible research with R Markdown

- Add-on package to R, developed by the RStudio team

- Combine text, code, images, video, equations into one document

- Render into PDF, MS Word, HTML (web page or site, slides, a blog, or even a book)

- R Markdown documentation; online tutorial; Mike Frank and Chris Hartgerink’s tutorial

- Similar to Mathematica notebooks, Jupyter notebooks

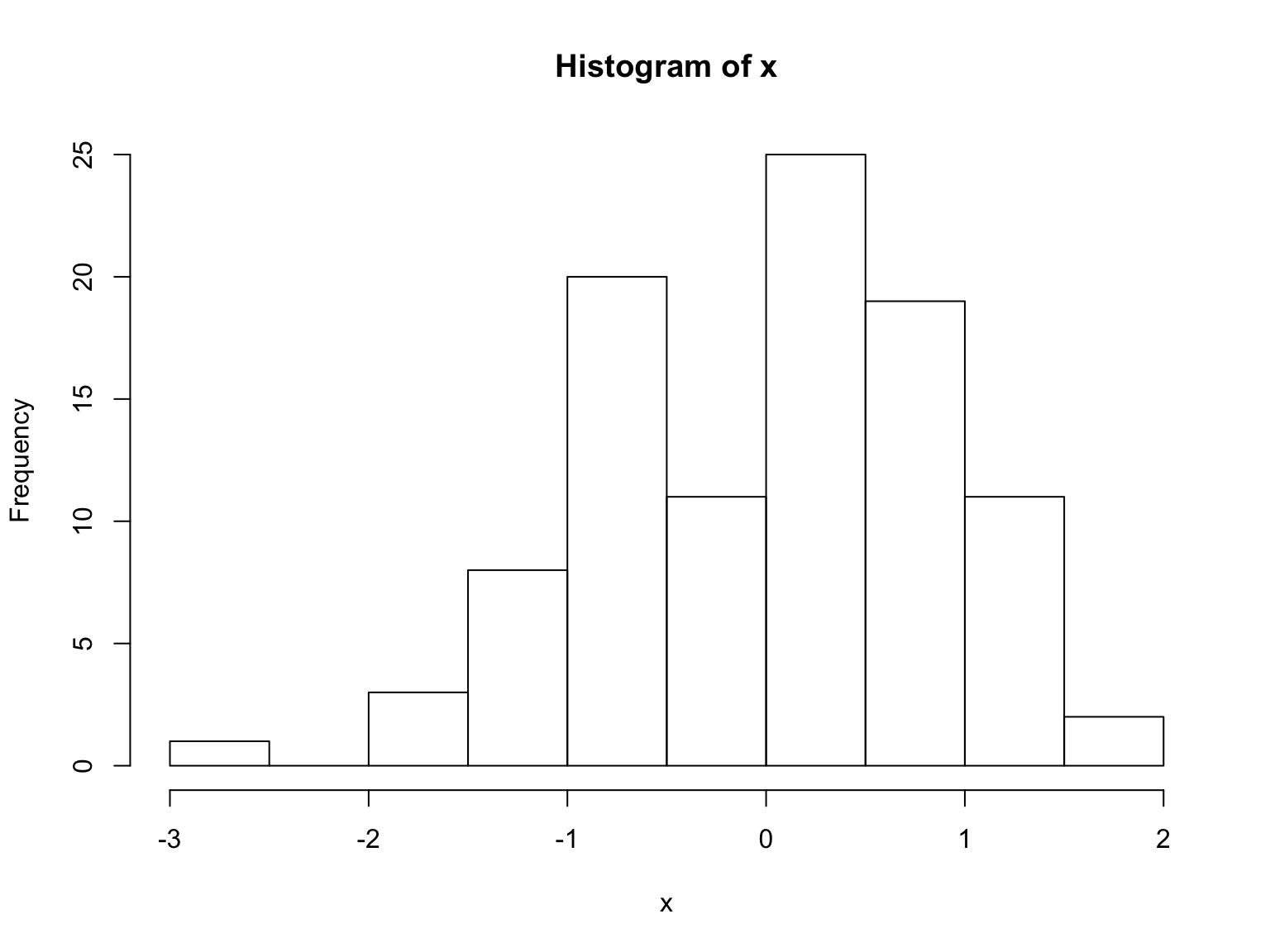

```r
x <- rnorm(n = 100, mean = 0, sd = 1)
hist(x)
```

The mean is 0.0131633, the range is [-2.714349, 1.7930418].
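The numbers in the sentence above were computed when the document was rendered, so they change on every render unless the random seed is fixed. A minimal R sketch; the seed value is arbitrary, and the `sprintf()` call only mimics what R Markdown inline expressions such as `` `r mean(x)` `` would insert into the text.

```r
# Fixing the seed makes the random draw repeatable.
set.seed(2019)
x1 <- rnorm(n = 100, mean = 0, sd = 1)

set.seed(2019)
x2 <- rnorm(n = 100, mean = 0, sd = 1)

identical(x1, x2)  # TRUE: same seed, same numbers

# Text built from the data, as inline R code would do in an .Rmd file.
summary_text <- sprintf("The mean is %.4f, the range is [%.4f, %.4f].",
                        mean(x1), min(x1), max(x1))
summary_text
```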

Ways to use R Markdown

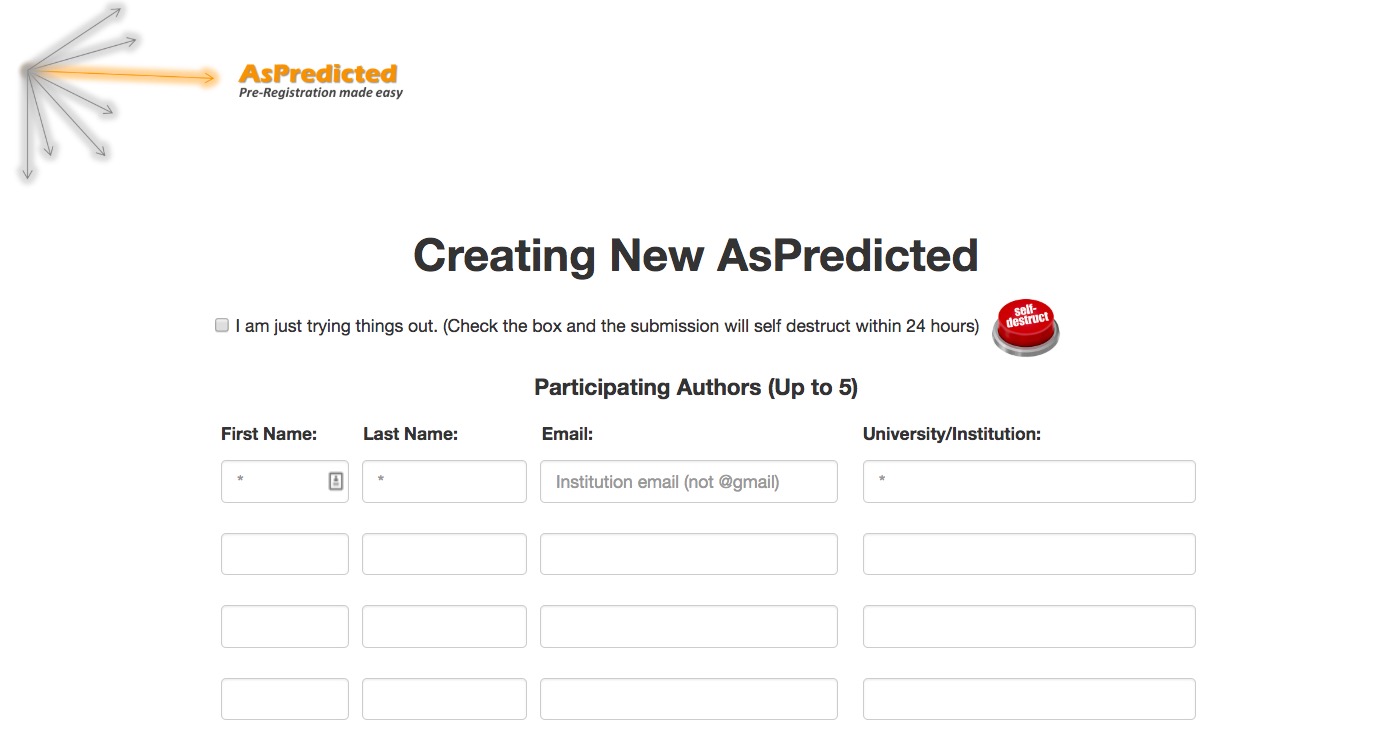

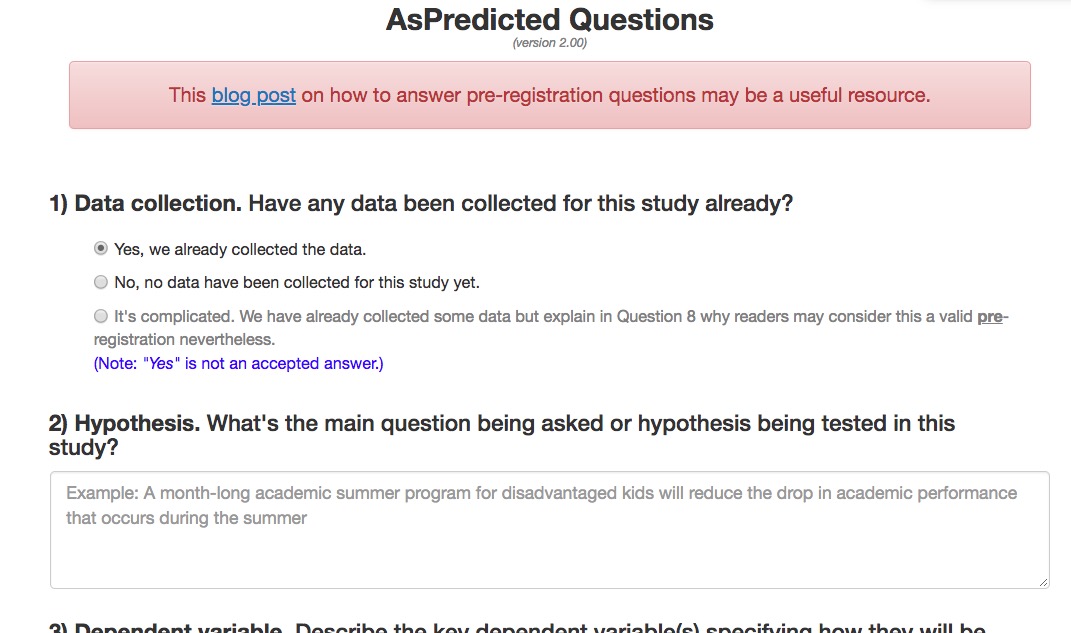

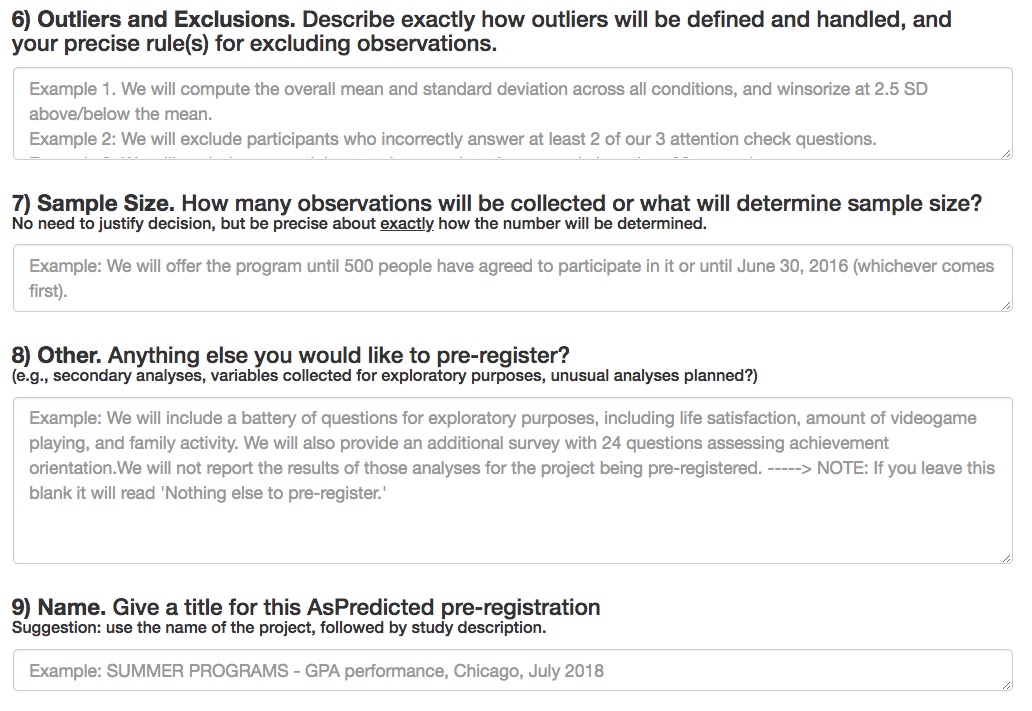

Registered reports and pre-registration

Why preregister?

- Nosek: “Don’t fool yourself”

- Separate confirmatory from exploratory analyses

- Confirmatory (hypothesis-driven): p-hacking matters

- Exploratory: p-values hard(er) to interpret
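Why exploratory p-values are hard to interpret can be shown with a small simulation. This R sketch assumes 20 independent outcomes, 30 participants per group, and no true effects at all (all values are illustrative). It estimates how often at least one of the 20 tests reaches p < .05 and compares that with the analytic rate 1 - 0.95^20.

```r
set.seed(543)      # arbitrary seed so the simulation is repeatable
n_sims <- 1000     # simulated "studies"
n_tests <- 20      # outcomes tested per study
n_per_group <- 30  # participants per group

# For each simulated study, run n_tests two-sample t-tests on pure noise
# and record whether any of them is "significant".
any_significant <- replicate(n_sims, {
  p_values <- replicate(n_tests, {
    group_a <- rnorm(n_per_group)
    group_b <- rnorm(n_per_group)
    t.test(group_a, group_b, var.equal = TRUE)$p.value
  })
  any(p_values < 0.05)
})

simulated_rate <- mean(any_significant)
analytic_rate <- 1 - (1 - 0.05)^n_tests  # about 0.64
c(simulated = simulated_rate, analytic = analytic_rate)
```

Declaring one test as confirmatory in advance keeps its false-positive rate at the nominal 5%; the remaining comparisons can still be reported, labeled as exploratory.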

How/where

Skeptics and converts

- Susan Goldin-Meadow (skeptic), “Why preregistration makes me nervous”

- Mike Frank (former skeptic, now advocate), “Preregister everything”

Large-scale replication studies

Studies are underpowered

“Assuming a realistic range of prior probabilities for null hypotheses, false report probability is likely to exceed 50% for the whole literature.”
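The link between low power and false reports can be worked through in R. This sketch uses base R's `power.t.test()`; the effect size (d = 0.5), group size, power, and prior probability are illustrative assumptions, and the false report probability is computed with Bayes' rule as the share of significant results that are false positives.

```r
# Power of a two-sample t-test with 20 per group and a "medium" effect (d = 0.5).
power_n20 <- power.t.test(n = 20, delta = 0.5, sd = 1, sig.level = 0.05)$power

# Per-group sample size needed for 80% power at the same effect size.
n_for_80 <- power.t.test(power = 0.80, delta = 0.5, sd = 1,
                         sig.level = 0.05)$n

# Share of significant results that are false positives, given alpha, power,
# and the prior probability that a tested hypothesis is true.
false_report_prob <- function(alpha, power, prior_true) {
  false_pos <- alpha * (1 - prior_true)
  true_pos <- power * prior_true
  false_pos / (false_pos + true_pos)
}

round(c(power_n20 = power_n20, n_for_80 = n_for_80), 2)
false_report_prob(alpha = 0.05, power = 0.20, prior_true = 0.10)  # about 0.69
```

With 20% power and a 1-in-10 prior, most "significant" findings in this toy calculation are false, which is the logic behind the quoted estimate.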

Many Labs

Reproducibility Project: Psychology (RPP)

“…The mean effect size (r) of the replication effects…was half the magnitude of the mean effect size of the original effects…”

“…39% of effects were subjectively rated to have replicated the original result…”

If it’s too good to be true, it probably isn’t

An open science future…

“The advancement of detailed and diverse knowledge about the development of the world’s children is essential for improving the health and well-being of humanity…”

SRCD Task Force on Scientific Integrity and Openness

“We regard scientific integrity, transparency, and openness as essential for the conduct of research and its application to practice and policy…”

SRCD Task Force on Scientific Integrity and Openness

“…the principles of human subject research require an analysis of both risks and benefits…such an analysis suggests that researchers may have a positive duty to share data in order to maximize the contribution that individual participants have made.”

https://psu-psychology.github.io/psy-543-clinical-research-methods-2019/

Stack

This talk was produced on 2019-12-03 in RStudio using R Markdown and the reveal.js framework. The code and materials used to generate the slides may be found at https://psu-psychology.github.io/psy-543-clinical-research-methods-2019/. Information about the R session that produced the code is as follows:

```
## R version 3.5.3 (2019-03-11)
## Platform: x86_64-apple-darwin15.6.0 (64-bit)
## Running under: macOS Mojave 10.14.6
##
## Matrix products: default
## BLAS: /Library/Frameworks/R.framework/Versions/3.5/Resources/lib/libRblas.0.dylib
## LAPACK: /Library/Frameworks/R.framework/Versions/3.5/Resources/lib/libRlapack.dylib
##
## locale:
## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8
##
## attached base packages:
## [1] stats graphics grDevices utils datasets methods base
##
## loaded via a namespace (and not attached):
## [1] compiler_3.5.3 magrittr_1.5 tools_3.5.3 htmltools_0.3.6
## [5] revealjs_0.9 yaml_2.2.0 Rcpp_1.0.1 stringi_1.4.3
## [9] rmarkdown_1.13 highr_0.8 knitr_1.23 stringr_1.4.0
## [13] xfun_0.8 digest_0.6.19 evaluate_0.14
```
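A listing like this one is the printed output of base R's `sessionInfo()`. A minimal R sketch for saving it as a plain-text file next to an analysis; the file name and location are illustrative assumptions.

```r
# Capture the current R version, platform, and loaded packages.
info <- sessionInfo()
info$R.version$version.string

# Save the printed listing so it travels with the analysis outputs.
session_file <- file.path(tempdir(), "session_info.txt")
writeLines(capture.output(print(info)), session_file)
```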
