2023-09-18 Mon

Replication in cancer biology

Overview

Announcements

- No assignments due this Friday

- Distribute and discuss Exercise 03

- Next week is a short week

- No class on Monday, September 25.

Last time…

- Did the Macbeth effect (Zhong & Liljenquist, 2006) replicate?

- Did the priming effect (Bargh, Chen, & Burrows, 1996) replicate?

- Is this yes/no question the right one to ask?

- If not, what’s a better question to ask?

Today

Replication in cancer biology

- Read & discuss

Important

Why might psychology majors care about replication challenges in a field outside of their own?

Replication in cancer biology

Errington, T. M., Denis, A., Perfito, N., Iorns, E. & Nosek, B. A. (2021). Challenges for assessing replicability in preclinical cancer biology. eLife, 10, e67995. https://doi.org/10.7554/eLife.67995

Tim Errington speaking at the Penn State Open Science Bootcamp 2023

Access

- Available on eLife without restriction

- Paper type: commentary/opinion piece

- Sought to repeat 193 experiments from 53 papers; were only able to repeat 50 experiments from 23 papers

- Had hoped to “[use] an approach in which the experimental protocols and plans for data analysis had to be peer reviewed and accepted for publication before experimental work could begin”, i.e., a registered report.

Abstract

“We conducted the Reproducibility Project: Cancer Biology to investigate the replicability of preclinical research in cancer biology. The initial aim of the project was to repeat 193 experiments from 53 high-impact papers, using an approach in which the experimental protocols and plans for data analysis had to be peer reviewed and accepted for publication before experimental work could begin…”

“However, the various barriers and challenges we encountered while designing and conducting the experiments meant that we were only able to repeat 50 experiments from 23 papers. Here we report these barriers and challenges…”

“First, many original papers failed to report key descriptive and inferential statistics: the data needed to compute effect sizes and conduct power analyses was publicly accessible for just 4 of 193 experiments. Moreover, despite contacting the authors of the original papers, we were unable to obtain these data for 68% of the experiments…”

Note

Effect sizes are measures of how much an intervention changed some outcome or how big a difference exists between groups.

A power analysis is a tool for determining how large a sample must be in order to detect an effect of a given size with a specified probability. For example, if we want to detect some effect 80% of the time, how large a sample do we need?

We will explore these ideas in more detail later in the course, specifically in Exercise 05: Alpha, Power, Effect Sizes, & Sample Size.
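To make the two ideas in this note concrete, here is a minimal Python sketch. It is not taken from the papers: the measurements are made up, the function names `cohens_d` and `n_per_group` are hypothetical, and the sample-size formula is the common normal approximation for comparing two groups, not an exact calculation.

```python
import math
from statistics import NormalDist, mean, stdev


def cohens_d(group_a, group_b):
    """Effect size: difference in means divided by the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate sample size per group for a two-sided, two-group comparison.

    Normal approximation: n = 2 * ((z_alpha + z_power) / d) ** 2.
    An exact t-test calculation gives a slightly larger answer.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the chosen alpha
    z_power = z.inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


# Made-up measurements, for illustration only
treated = [5.1, 6.3, 5.8, 6.9, 6.0]
control = [4.2, 5.0, 4.7, 5.5, 4.9]

print("Cohen's d:", round(cohens_d(treated, control), 2))
print("n per group for d = 0.5, 80% power:", n_per_group(0.5))
```

Under this approximation, a medium effect (d = 0.5) needs about 63 observations per group to be detected 80% of the time. Smaller effects need much larger samples, which is why replicators need the original effect sizes to plan their studies.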

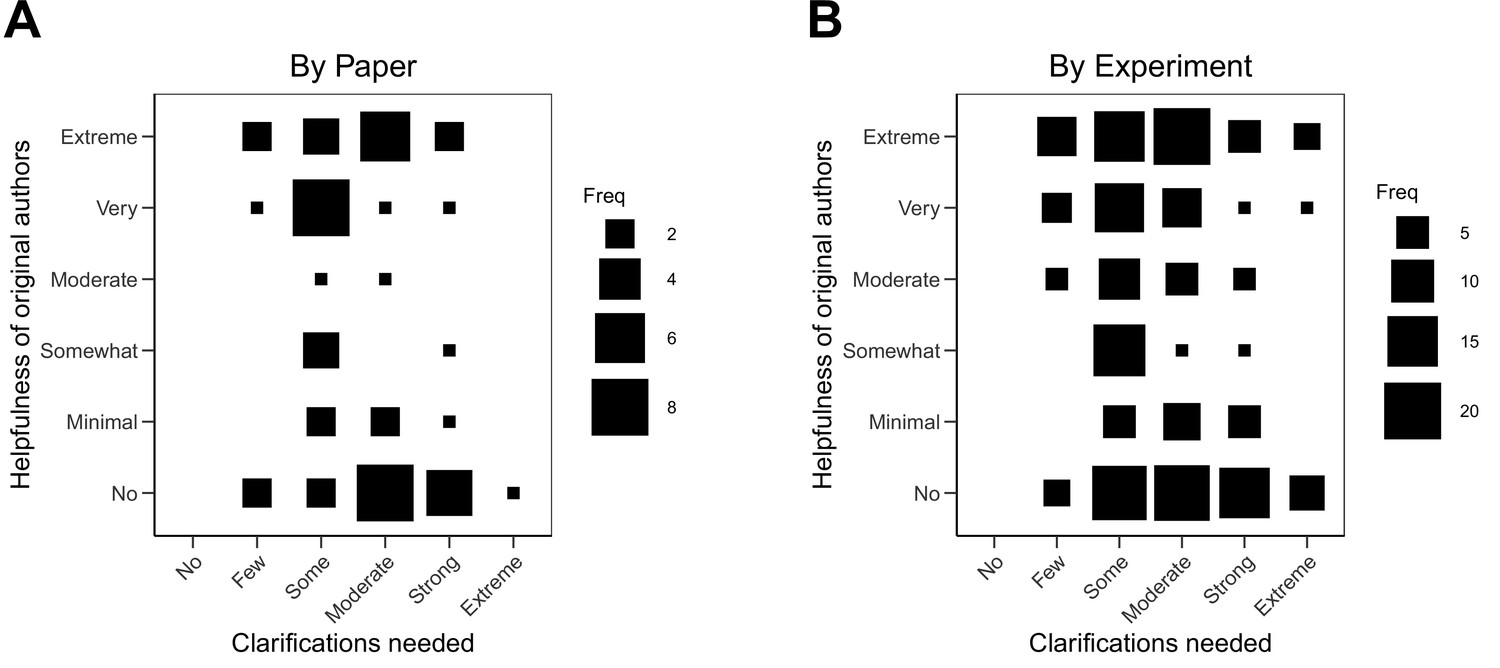

“Second, none of the 193 experiments were described in sufficient detail in the original paper to enable us to design protocols to repeat the experiments, so we had to seek clarifications from the original authors…”

Note

How often do journal articles alone demonstrate methods reproducibility (Goodman, Fanelli, & Ioannidis, 2016)?

Why do you suppose Dr. Gilmore likes to read the methods section first?

Should authors have to publish detailed protocols alongside their papers?

“While authors were extremely or very helpful for 41% of experiments, they were minimally helpful for 9% of experiments, and not at all helpful (or did not respond to us) for 32% of experiments…”

Note

So, \(32+9=41\)% were minimally or not at all helpful.

Do you think that researchers whose studies are funded by taxpayers, e.g., the U.S. National Institutes of Health (NIH), should be required to share data or help replication projects like this?

“Third, once experimental work started, 67% of the peer-reviewed protocols required modifications to complete the research and just 41% of those modifications could be implemented. Cumulatively, these three factors limited the number of experiments that could be repeated…”

“This experience draws attention to a basic and fundamental concern about replication – it is hard to assess whether reported findings are credible.”

“Science is a system for accumulating knowledge. The credibility of knowledge claims relies, in part, on the transparency and repeatability of the evidence used to support them. As a social system, science operates with norms and processes to facilitate the critical appraisal of claims, and transparency and skepticism are virtues endorsed by most scientists (Anderson et al., 2007)…”

“Science is also relatively non-hierarchical in that there are no official arbiters of the truth or falsity of claims. However, the interrogation of new claims and evidence by peers occurs continuously, and most formally in the peer review of manuscripts prior to publication. Once new claims are made public, other scientists may question, challenge, or extend them by trying to replicate the evidence or to conduct novel research…”

“The evaluative processes of peer review and replication are the basis for believing that science is self-correcting. Self-correction is necessary because mistakes and false starts are expected when pushing the boundaries of knowledge. Science works because it efficiently identifies those false starts and redirects resources to new possibilities…”

“We believe everything we wrote in the previous paragraph except for one word in the last sentence – efficiently. Science advances knowledge and is self-correcting, but we do not believe it is doing so very efficiently. Many parts of research could improve to accelerate discovery.”

Fig 1 from (Errington, Denis, et al., 2021)

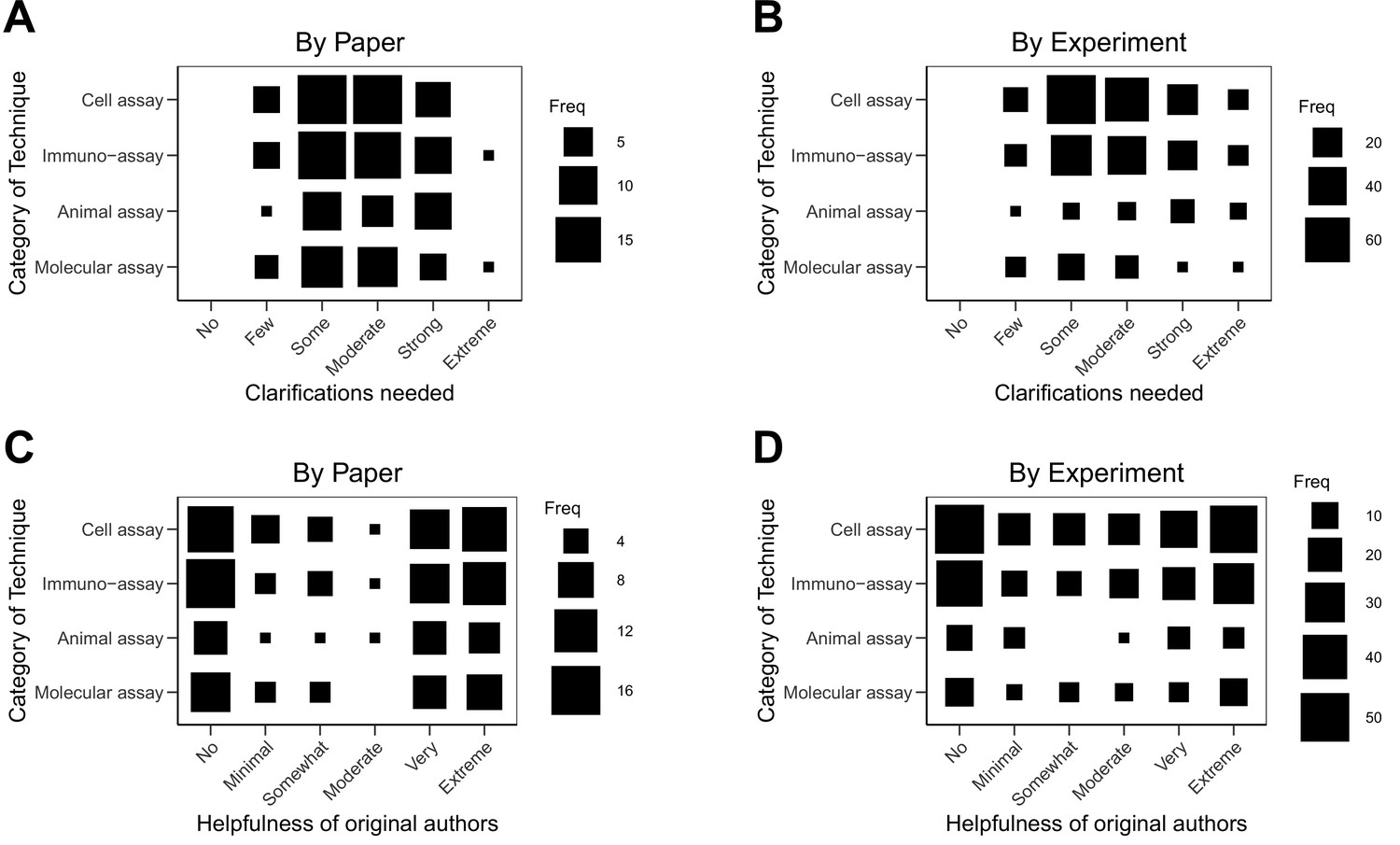

Fig 1 (suppl) from (Errington, Denis, et al., 2021)

Fig 2 from (Errington, Denis, et al., 2021)

Fig 2 (suppl 2) from Errington, Denis, et al. (2021)

Replication/Transparency notes

- Pre-registered

- All data shared on Open Science Framework

- Most of the results can be gleaned from the figures

Errington, T. M., Mathur, M., Soderberg, C. K., Denis, A., Perfito, N., Iorns, E. & Nosek, B. A. (2021). Investigating the replicability of preclinical cancer biology. eLife, 10, e71601. https://doi.org/10.7554/eLife.71601.

Access

- Available on eLife without restriction

Abstract

“Replicability is an important feature of scientific research, but aspects of contemporary research culture, such as an emphasis on novelty, can make replicability seem less important than it should be. The Reproducibility Project: Cancer Biology was set up to provide evidence about the replicability of preclinical research in cancer biology by repeating selected experiments from high-impact papers…”

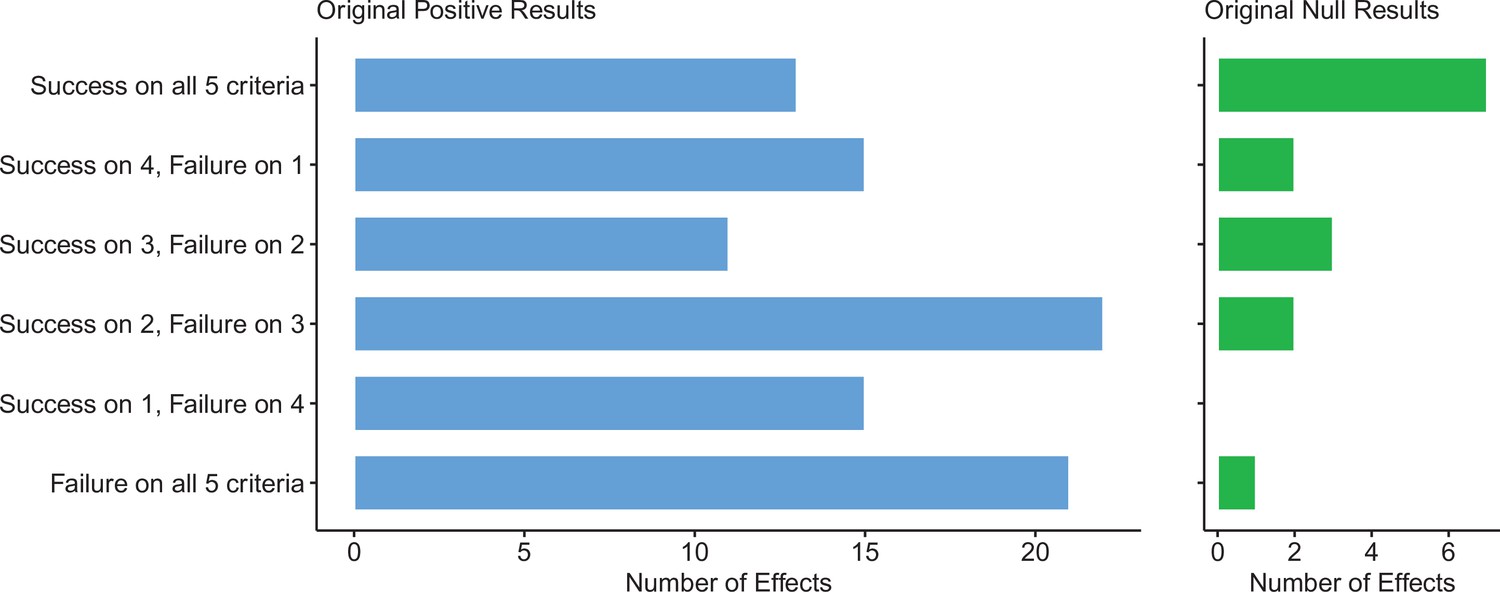

“A total of 50 experiments from 23 papers were repeated, generating data about the replicability of a total of 158 effects. Most of the original effects were positive effects (136), with the rest being null effects (22). A majority of the original effect sizes were reported as numerical values (117), with the rest being reported as representative images (41)…”

“We employed seven methods to assess replicability, and some of these methods were not suitable for all the effects in our sample. One method compared effect sizes: for positive effects, the median effect size in the replications was 85% smaller than the median effect size in the original experiments, and 92% of replication effect sizes were smaller than the original…”

“The other methods were binary – the replication was either a success or a failure – and five of these methods could be used to assess both positive and null effects when effect sizes were reported as numerical values. For positive effects, 40% of replications (39/97) succeeded according to three or more of these five methods, and for null effects 80% of replications (12/15) were successful on this basis; combining positive and null effects, the success rate was 46% (51/112)…”

“A successful replication does not definitively confirm an original finding or its theoretical interpretation. Equally, a failure to replicate does not disconfirm a finding, but it does suggest that additional investigation is needed to establish its reliability.”
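The success rates quoted in the abstract can be checked from the counts it reports. This short Python sketch uses only those counts; the variable and function names are illustrative.

```python
# Counts quoted in the abstract: (successful replications, effects assessed)
counts = {
    "positive": (39, 97),
    "null": (12, 15),
}


def success_rate(successes, total):
    """Percentage of effects counted as successfully replicated."""
    return 100 * successes / total


for label, (successes, total) in counts.items():
    print(f"{label}: {successes}/{total} = {success_rate(successes, total):.0f}%")

# Combine positive and null effects
combined_successes = sum(s for s, _ in counts.values())
combined_total = sum(t for _, t in counts.values())
print(
    f"combined: {combined_successes}/{combined_total} = "
    f"{success_rate(combined_successes, combined_total):.0f}%"
)
```

The combined figure is pulled toward the positive-effect rate because positive effects make up most of the sample (97 of 112).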

- Type of paper: Empirical (replication)

- Who/what was the sample: \(n=50\) experiments from \(n=23\) papers with \(n=158\) effects

Measures of replication

- Numerical results (difference = “positive” effect or no difference = “null” effect)

- Same direction as original

- Same direction and statistically significant

- Original effect size (ES) in confidence interval (CI) for replication

- Replication ES in original CI
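The binary measures listed above can be sketched in Python for an original positive effect. This is an illustration under stated assumptions, not the authors' analysis code: the `Estimate` class and `assess` function are hypothetical, the numbers are invented, and "statistically significant" is approximated here as "the confidence interval excludes zero".

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    effect: float   # effect size (ES)
    ci_low: float   # lower bound of the confidence interval (CI)
    ci_high: float  # upper bound of the CI

    def contains(self, value):
        return self.ci_low <= value <= self.ci_high

    def significant(self):
        # Assumption: treat "CI excludes zero" as statistically significant
        return not self.contains(0.0)


def assess(original, replication):
    """Apply four yes/no replication criteria to one original/replication pair."""
    same_direction = original.effect * replication.effect > 0
    return {
        "same_direction": same_direction,
        "same_direction_and_significant": same_direction and replication.significant(),
        "original_es_in_replication_ci": replication.contains(original.effect),
        "replication_es_in_original_ci": original.contains(replication.effect),
    }


# Invented numbers, for illustration only
original = Estimate(effect=2.9, ci_low=1.2, ci_high=4.6)
replication = Estimate(effect=0.4, ci_low=-0.3, ci_high=1.1)

result = assess(original, replication)
for criterion, passed in result.items():
    print(f"{criterion}: {'yes' if passed else 'no'}")
```

In this invented case the replication points in the same direction as the original but fails the other three checks, so the answer to "did it replicate?" depends on which criterion is applied.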

Fig. 6 from (Errington, Denis, et al., 2021)

“We used seven criteria to assess the replicability of 158 effects in a selection of 23 papers reporting the results of preclinical research in cancer biology. Across multiple criteria, the replications provided weaker evidence for the findings than the original papers. For original positive effects that were reported as numerical values, the median effect size for the replications was 0.43, which was 85% smaller than the median of the original effect sizes (2.96)…”

“And although 79% of the replication effects were in the same direction as the original finding (random would be 50%), 92% of replication effect sizes were smaller than the original (combining numeric and representative images).”
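The "85% smaller" figure follows directly from the two medians quoted above. A minimal Python check, using only those two numbers:

```python
original_median = 2.96     # median original effect size (quoted above)
replication_median = 0.43  # median replication effect size (quoted above)

# Proportional reduction relative to the original
shrinkage = (original_median - replication_median) / original_median
# Replication effect as a share of the original
remaining = replication_median / original_median

print(f"Replication median is {shrinkage:.0%} smaller than the original")
print(f"Replication median is {remaining:.0%} of the original")
```

Put another way, the typical replication effect was roughly one-seventh the size of the typical original effect.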

Note

A consistent finding emerging from replication studies across fields is that replication effect sizes are often smaller than the original.

Why do you suppose this is so?

What does this mean for ‘consumers’ of science?

“A single failure to replicate a finding does not render a verdict on its replicability or credibility. A failure to replicate could occur because the original finding was a false positive.”

“A failure to replicate could also occur because the replication was a false negative. This can occur if the replication was underpowered or the design or execution was flawed. Such failures are uninteresting but important.”

“Successfully replicating a finding also does not render a verdict on its credibility. Successful replication increases confidence that the finding is repeatable, but it is mute to its meaning and validity. For example, if the finding is a result of unrecognized confounding influences or invalid measures, then the interpretation may be wrong even if it is easily replicated.”

“…Also, the interpretation of a finding may be much more general than is justified by the evidence. The particular experimental paradigm may elicit highly replicable findings, but also apply only to very specific circumstances that are much more circumscribed than the interpretation.”

“After conducting dozens of replications, we can declare definitive understanding of precisely zero of the original findings. That may seem a dispiriting conclusion from such an intense effort, but it is the reality of doing research. Original findings provided initial evidence, replications provide additional evidence.”

“Sometimes the replications increased confidence in the original findings, sometimes they decreased confidence. In all cases, we now have more information than we had. In no cases, do we have all the information that we need. Science makes progress by progressively identifying error and reducing uncertainty.”

“Replication actively confronts current understanding, sometimes with affirmation, other times signaling caution and a call to investigate further. In science, that’s progress.”

Next time

What R we talking about?

- Read

- (Fidler & Wilcox, 2021)

- (Skim) (Nosek et al., 2022) and (Goodman et al., 2016)

- Class notes

Resources

References

PSYCH 490.009: 2023-09-18 Monday