Prevalence of QRPs

2024-10-16 Wed

Thought questions

- What are we learning in this course?

- Who should care about the prevalence of questionable research practices?

- Why should they care?

Overview

Announcements

- Due Friday

- Final project proposal

How’s it going?

Don Ridgway 1928-2018

https://colostudentmedia.com/featured-news/2018/08/19/father-of-scholastic-journalism-in-colorado-dies-at-age-90/

What are you supposed to be learning?

Last time…

- Wrap-up on p-hacking

- How’d we do?

Today

Prevalence of QRPs

- Read & Discuss

- John, Loewenstein, & Prelec (2012)

- Discuss results from

How prevalent are QRPs?

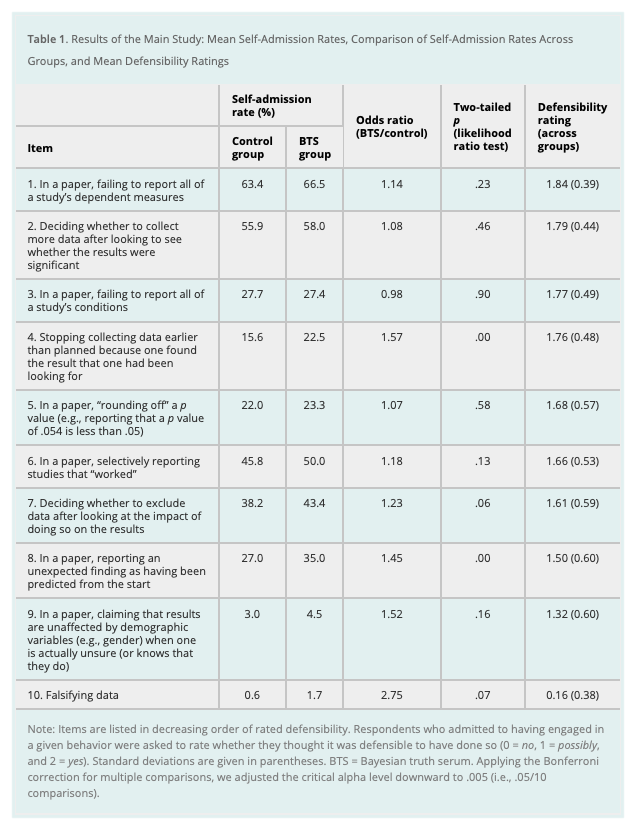

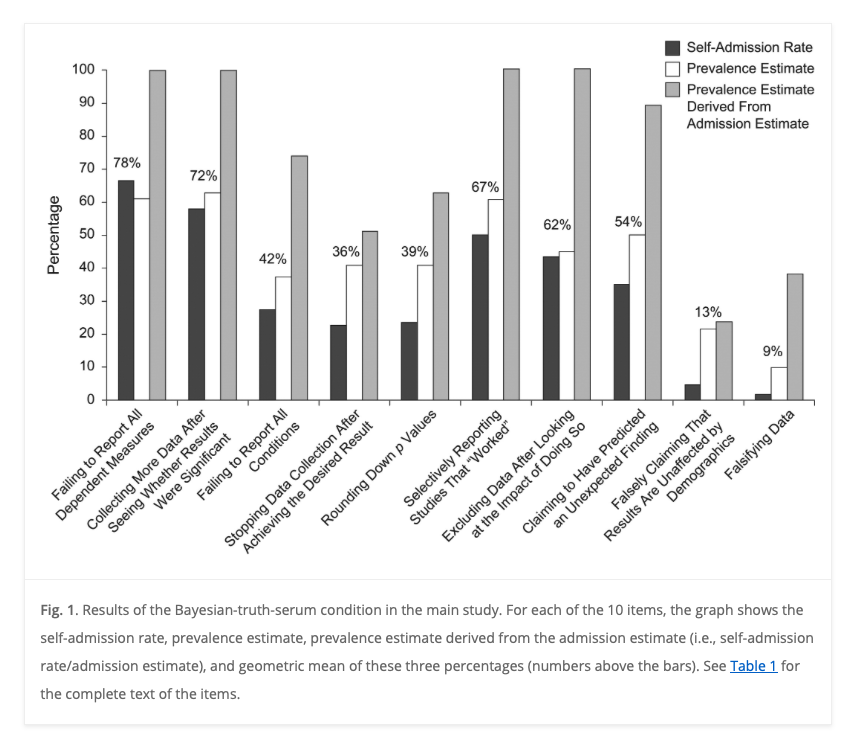

John et al. (2012)

Cases of clear scientific misconduct have received significant media attention recently, but less flagrantly questionable research practices may be more prevalent and, ultimately, more damaging to the academic enterprise. Using an anonymous elicitation format supplemented by incentives for honest reporting, we surveyed over 2,000 psychologists about their involvement in questionable research practices.

John et al. (2012)

The impact of truth-telling incentives on self-admissions of questionable research practices was positive, and this impact was greater for practices that respondents judged to be less defensible. Combining three different estimation methods, we found that the percentage of respondents who have engaged in questionable practices was surprisingly high. This finding suggests that some questionable practices may constitute the prevailing research norm.

John et al. (2012)

Accessibility/openness notes

- Paper not openly accessible.

- Paper was accessible via authenticated access to PSU library.

The two versions of the survey differed in the incentives they offered to respondents. In the Bayesian-truth-serum (BTS) condition, a scoring algorithm developed by one of the authors (Prelec, 2004) was used to provide incentives for truth telling. This algorithm uses respondents’ answers about their own behavior and their estimates of the sample distribution of answers as inputs in a truth-rewarding scoring formula. Because the survey was anonymous, compensation could not be directly linked to individual scores.

Instead, respondents were told that we would make a donation to a charity of their choice, selected from five options, and that the size of this donation would depend on the truthfulness of their responses, as determined by the BTS scoring system. By inducing a (correct) belief that dishonesty would reduce donations, we hoped to amplify the moral stakes riding on each answer (for details on the donations, see Supplementary Results in the Supplemental Material).

Respondents were not given the details of the scoring system but were told that it was based on an algorithm published in Science and were given a link to the article. There was no deception: Respondents’ BTS scores determined our contributions to the five charities.

Respondents in the control condition were simply told that a charitable donation would be made on behalf of each respondent. (For details on the effect of the size of the incentive on response rates, see Participation Incentive Survey in the Supplemental Material.)

John et al. (2012)
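To make the "truth-rewarding scoring formula" less of a black box, here is a minimal sketch of a Bayesian-truth-serum-style score, based on my understanding of the general form in Prelec (2004). Respondents did not see the formula, and this is not the authors' code. The function name `bts_scores`, its argument layout, and the default weight `alpha = 1.0` are hypothetical choices for illustration. Each respondent gets an information score, which rewards answers that turn out to be more common than the group collectively predicted, plus a prediction score, which rewards accurate guesses about how others answered.

```python
import math


def bts_scores(answers, predictions, alpha=1.0):
    """Score each respondent with a Bayesian-truth-serum-style formula (sketch).

    answers:     chosen option index for each respondent (0 .. m-1)
    predictions: for each respondent, a length-m list of predicted shares of
                 the sample choosing each option (all > 0, summing to 1)
    alpha:       weight on the prediction score (hypothetical default of 1.0)
    """
    n = len(answers)
    m = len(predictions[0])
    # x_bar[k]: observed share of respondents who chose option k
    x_bar = [sum(1 for a in answers if a == k) / n for k in range(m)]
    # log_y_bar[k]: log of the geometric mean of everyone's predicted share for k
    log_y_bar = [sum(math.log(p[k]) for p in predictions) / n for k in range(m)]

    scores = []
    for a, p in zip(answers, predictions):
        # Information score: positive if the chosen answer is more common
        # than respondents collectively predicted ("surprisingly common")
        info = math.log(x_bar[a]) - log_y_bar[a]
        # Prediction score: minus the KL divergence of the observed shares
        # from this respondent's predictions (0 when the prediction is exact);
        # options nobody chose contribute nothing
        pred = sum(
            x_bar[k] * (math.log(p[k]) - math.log(x_bar[k]))
            for k in range(m)
            if x_bar[k] > 0
        )
        scores.append(info + alpha * pred)
    return scores


# Hypothetical yes/no item (0 = "no", 1 = "yes"), three respondents
answers = [0, 0, 1]
predictions = [[0.6, 0.4], [0.9, 0.1], [0.5, 0.5]]
print(bts_scores(answers, predictions))
```

With `alpha = 1` the scores sum to zero across respondents, which follows algebraically from the two terms. How scores were turned into charity donations is described in the paper's Supplemental Material and is not modeled here.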

Note

What do you think about the “truth serum” manipulation?

Do the data persuade you that it made respondents more honest?

Reproducibility notes for John et al. (2012)

Supplemental Material

Additional supporting information may be found at http://pss.sagepub.com/content/by/supplemental-data

John et al. (2012)

- I visited this URL: http://pss.sagepub.com/content/by/supplemental-data.

- Then, I went to the journal page and searched for the article title.

- Since the article is behind a paywall, I wasn’t able to access the supplemental materials that way.

- After authenticating to the PSU library, I was able to find a PDF of the supplementary material. It and the original paper are on Canvas.

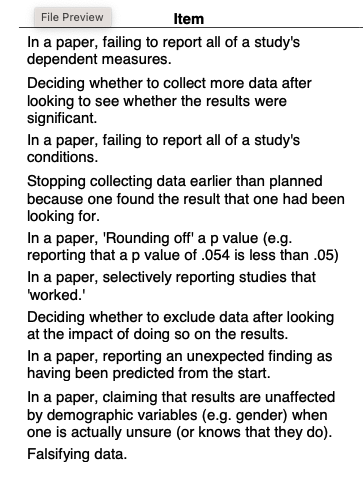

- I was unable to find the raw data, but I found the questions on p. 5 of the supplementary material.

Supplementary material for John et al. (2012)

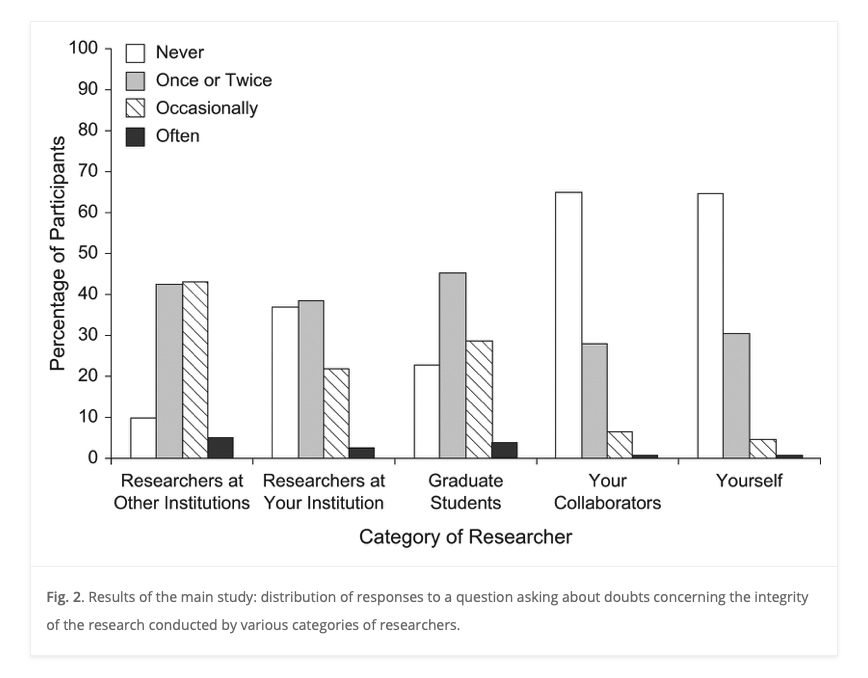

More evidence about prevalence of QRPs

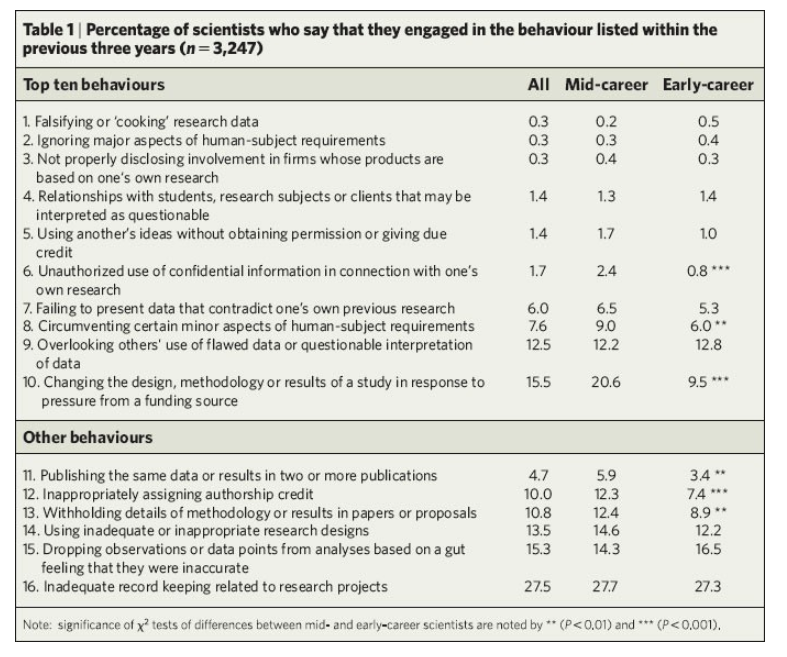

Survey of n=3,247 NIH-funded scientists

Xie, Wang, & Kong (2021)

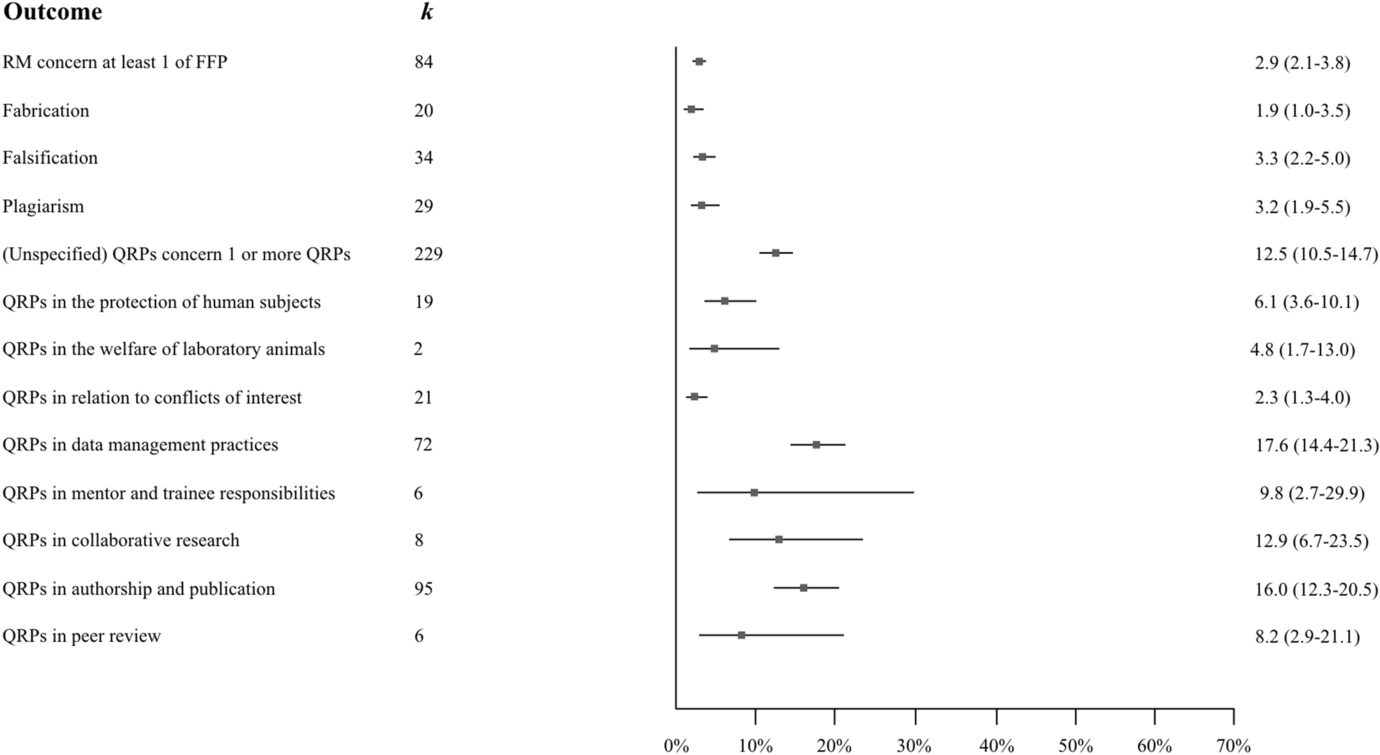

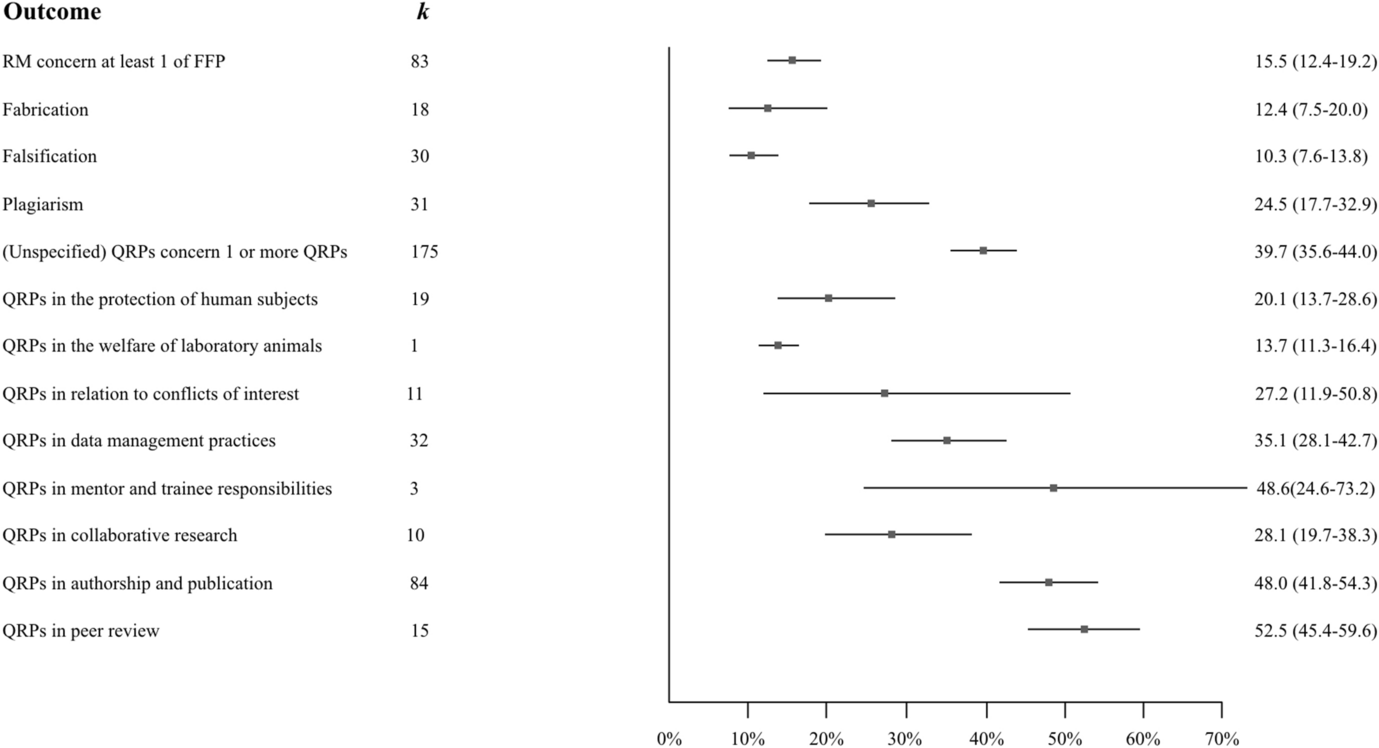

Irresponsible research practices damaging the value of science has been an increasing concern among researchers, but previous work failed to estimate the prevalence of all forms of irresponsible research behavior. Additionally, these analyses have not included articles published in the last decade from 2011 to 2020. This meta-analysis provides an updated meta-analysis that calculates the pooled estimates of research misconduct (RM) and questionable research practices (QRPs).

The estimates, committing RM concern at least 1 of FFP (falsification, fabrication, plagiarism) and (unspecified) QRPs concern 1 or more QRPs, were 2.9% (95% CI 2.1–3.8%) and 12.5% (95% CI 10.5–14.7%), respectively.
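Xie et al. report pooled prevalence estimates with 95% confidence intervals. As a rough illustration of what "pooling" means, the sketch below uses one common approach, a DerSimonian-Laird random-effects model on the logit scale. This is an assumption for teaching purposes, not necessarily the method Xie et al. used, and the function name `pooled_prevalence` and the study counts are hypothetical.

```python
import math


def pooled_prevalence(counts, sizes, z=1.96):
    """Random-effects pooled proportion (DerSimonian-Laird, logit scale).

    counts: respondents in each study who admitted the behavior
    sizes:  total respondents in each study
    Returns (estimate, lower, upper) on the proportion scale.
    """
    y, v = [], []
    for x, n in zip(counts, sizes):
        # Continuity correction only when a study has 0% or 100% admissions
        cc = 0.5 if x == 0 or x == n else 0.0
        a, b = x + cc, n - x + cc
        y.append(math.log(a / b))  # logit of the study's proportion
        v.append(1 / a + 1 / b)    # approximate variance of that logit

    # Fixed-effect weights, pooled mean, and Cochran's Q (heterogeneity)
    w = [1 / vi for vi in v]
    mean_fe = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - mean_fe) ** 2 for wi, yi in zip(w, y))

    # DerSimonian-Laird estimate of between-study variance tau^2
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each study's variance
    w_re = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))

    def expit(t):
        # Inverse logit: map back from the logit scale to a proportion
        return 1 / (1 + math.exp(-t))

    return expit(mu), expit(mu - z * se), expit(mu + z * se)


# Hypothetical studies (not Xie et al.'s data)
est, lo, hi = pooled_prevalence(counts=[30, 45, 12], sizes=[200, 400, 150])
print(f"pooled {est:.3f}, 95% CI {lo:.3f}-{hi:.3f}")
```

Working on the logit scale keeps the confidence interval inside 0 to 1, and the between-study variance term widens the interval when studies disagree more than sampling error alone would predict.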

Self-reported prevalence

Figure 2 from Xie et al. (2021)

Observed prevalence

Figure 3 from Xie et al. (2021)

Your thoughts?

Note

What should we make of the Xie et al. (2021) finding that observed prevalence of QRPs is greater than self-reported prevalence?

Are QRPs a problem? If so, how big a problem? If not, why not?

Next time

File drawer effect & Work Session: Alpha, Power, Effect Sizes, & Sample Size

- Read

- (Skim) (Rosenthal, 1979). PDF on Canvas

- (Optional) (Franco, Malhotra, & Simonovits, 2014)

- Assignment

- Exercise 06: Alpha, Power, Effect Sizes, & Sample Size, final version due Wednesday, October 30

- Due

- Final project proposal

- Class notes